Modeling materials using density functional theory

Copyright © 2012 to present John Kitchin

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License".

Table of Contents

- 1. Introduction to this book

- 2. Introduction to DFT

- 3. Molecules

- 3.1. Defining and visualizing molecules

- 3.2. Simple properties

- 3.3. Simple properties that require single computations

- 3.4. Geometry optimization

- 3.5. Vibrational frequencies

- 3.6. Simulated infrared spectra

- 3.7. Thermochemical properties of molecules

- 3.8. Molecular reaction energies

- 3.9. Molecular reaction barriers

- 4. Bulk systems

- 4.1. Defining and visualizing bulk systems

- 4.2. Computational parameters that are important for bulk structures

- 4.3. Determining bulk structures

- 4.4. TODO Using built-in ase optimization with vasp

- 4.5. Cohesive energy

- 4.6. Elastic properties

- 4.7. Bulk thermodynamics

- 4.8. Effect of pressure on phase stability

- 4.9. Bulk reaction energies

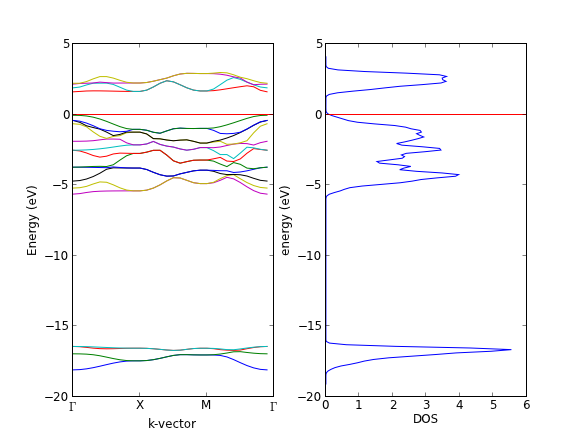

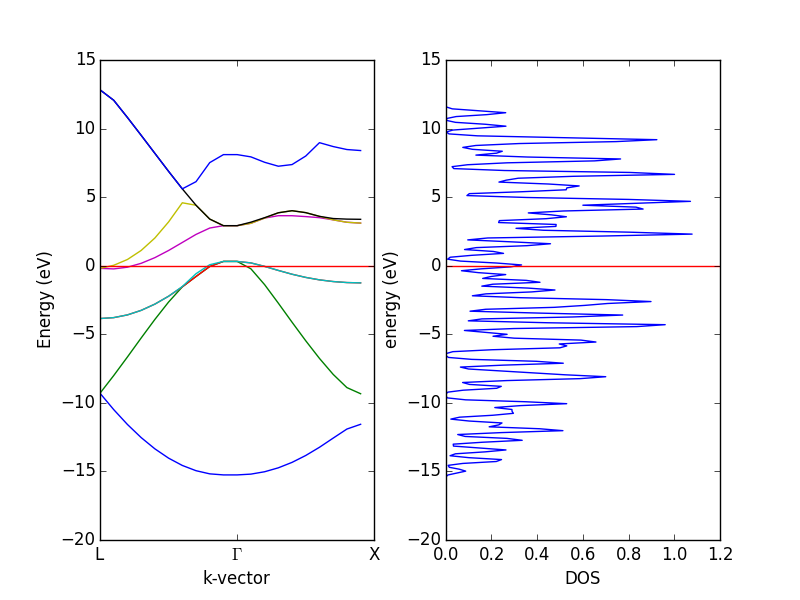

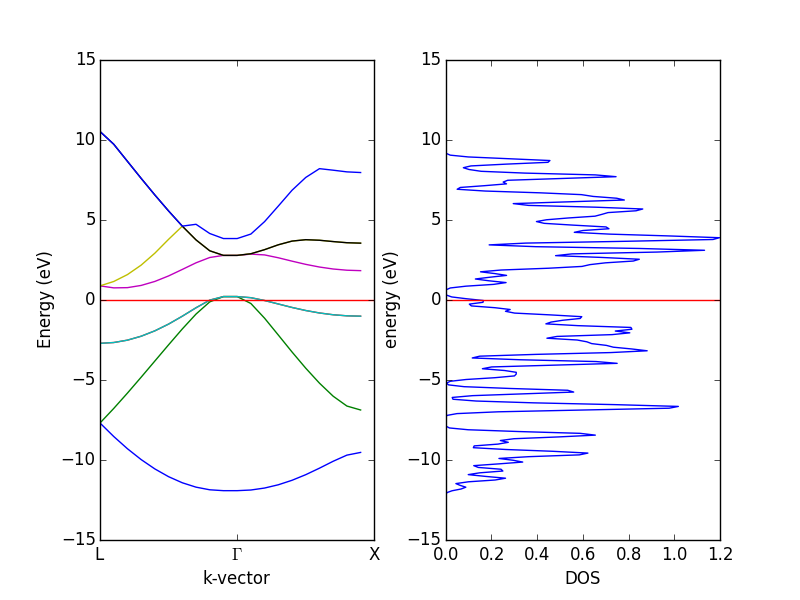

- 4.10. Bulk density of states

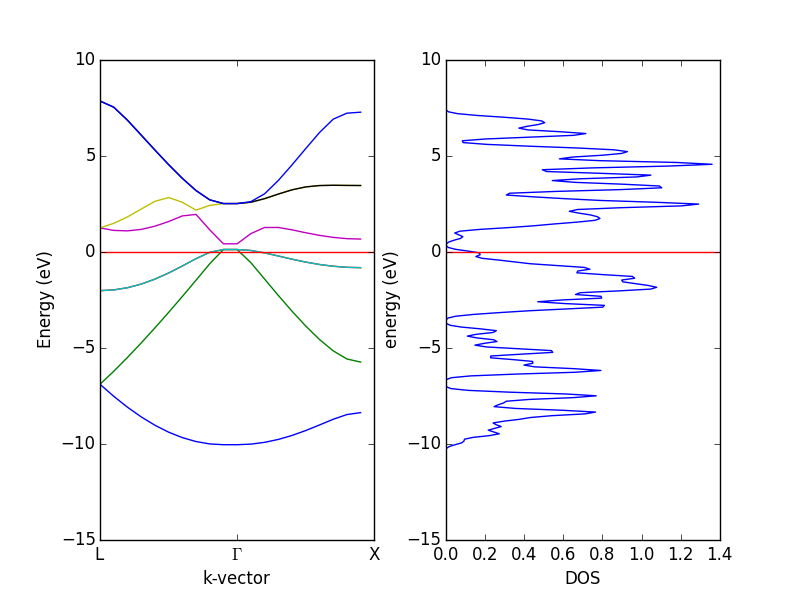

- 4.11. Atom projected density of states

- 4.12. Band structures

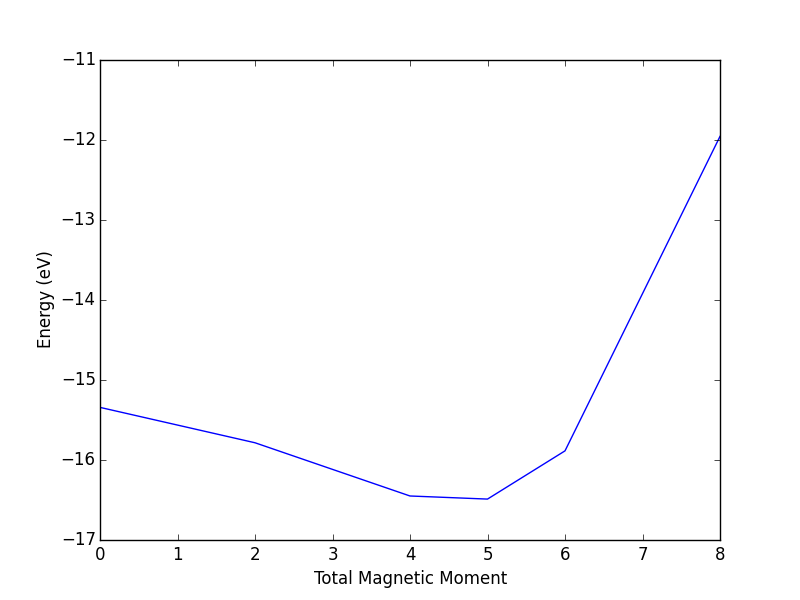

- 4.13. Magnetism

- 4.14. TODO phonons

- 4.15. TODO solid state NEB

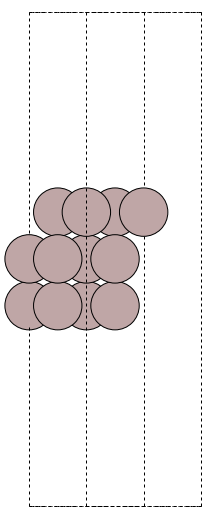

- 5. Surfaces

- 6. Atomistic thermodynamics

- 7. Advanced electronic structure methods

- 8. Databases in molecular simulations

- 9. Acknowledgments

- 10. Appendices

- 11. Python

- 12. References

- 13. GNU Free Documentation License

- 14. Index

1 Introduction to this book

This book serves two purposes: 1) to provide worked examples of using DFT to model materials properties, and 2) to provide references to more advanced treatments of these topics in the literature. It is not a definitive reference on density functional theory. Along the way to learning how to perform the calculations, you will learn how to analyze the data, make plots, and how to interpret the results. This book is very much "recipe" oriented, with the intention of giving you enough information and knowledge to start your research. In that sense, many of the computations are not publication quality with respect to convergence of calculation parameters.

You will read a lot of Python code in this book. I believe that computational work should always be scripted. Scripting provides a written record of everything you have done, making it more likely that you (or others) can reproduce your results, or report exactly how they were obtained, at a later time.

This book makes heavy use of many computational tools including:

- Python

- Atomic Simulation Environment (ase)

- numpy

- scipy

- matplotlib

- emacs

- org-mode This book is written in org-mode, and is best read in emacs in org-mode. This format provides clickable links, easy navigation, syntax highlighting, as well as the ability to interact with the tables and code. The book is also available in PDF.

- git This book is available at https://github.com/jkitchin/dft-book

- vasp This is the Python module used extensively here. It is available at https://github.com/jkitchin/vasp

The DFT code used primarily in this book is VASP.

Similar code would be used for other calculators, e.g. GPAW, Jacapo, etc. You would just have to import the Python modules for those codes and replace the code that defines the calculator.

Review all the hyperlinks in this chapter.

2 Introduction to DFT

A comprehensive overview of DFT is beyond the scope of this book, as excellent reviews on these subjects are readily found in the literature, and are suggested reading in the following paragraph. Instead, this chapter is intended to provide a useful starting point for a non-expert to begin learning about and using DFT in the manner used in this book. Much of the information presented here is standard knowledge among experts, but a consequence of this is that it is rarely discussed in current papers in the literature. A secondary goal of this chapter is to provide new users with a path through the extensive literature available and to point out potential difficulties and pitfalls in these calculations.

A modern and practical introduction to density functional theory can be found in Sholl and Steckel sholl-2009-densit-funct-theor. A fairly standard textbook on DFT is the one written by Parr and Yang parr-yang. The Chemist's Guide to DFT koch2001 is more readable and contains more practical information for running calculations, but both of these books focus on molecular systems. The standard texts in solid state physics are by Kittel kittel and Ashcroft and Mermin ashcroft-mermin. Both have their fine points, the former being more mathematically rigorous and the latter more readable. However, neither of these books is particularly easy to relate to chemistry. For this, one should consult the exceptionally clear writings of Roald Hoffmann hoffmann1987,RevModPhys.60.601, and follow these with the work of Nørskov and coworkers hammer2000:adv-cat,greeley2002:elect.

In this chapter, only the elements of DFT that are relevant to this work will be discussed. An excellent review on other implementations of DFT can be found in Reference freeman1995:densit, and details on the various algorithms used in DFT codes can be found in Refs. payne1992:iterat,Kresse199615.

One of the most useful sources of information has been the dissertations of other students, perhaps because the difficulties they faced in learning the material are still fresh in their minds. Thomas Bligaard, a coauthor of Dacapo, wrote a particularly relevant thesis on exchange/correlation functionals bligaard2000:exchan-correl-funct and a dissertation illustrating the use of DFT to design new alloys with desirable thermal and mechanical properties bligaard2003:under-mater-proper-basis-densit. The Ph.D. thesis of Ari Seitsonen contains several useful appendices on k-point setups, and convergence tests of calculations, in addition to a thorough description of DFT and analysis of calculation output seitsonen2000:phd. Finally, another excellent overview of DFT and its applications to bimetallic alloy phase diagrams and surface reactivity is presented in the PhD thesis of Robin Hirschl hirschl2002:binar-trans-metal-alloy-their-surfac.

2.1 Background

In 1926, Erwin Schrödinger published the first accounts of his now famous wave equation pauling1963. He later shared the Nobel prize with Paul A. M. Dirac in 1933 for this discovery. Schrödinger's wave function seemed extremely promising, as it contains all of the information available about a system. Unfortunately, most practical systems of interest consist of many interacting electrons, and the effort required to find solutions to Schrödinger's equation increases exponentially with the number of electrons, limiting this approach to systems with a small number of relevant electrons, \(N \lesssim O(10)\) RevModPhys.71.1253. Even if this rough estimate is off by an order of magnitude, a system with 100 electrons is still very small, for example, two Ru atoms if all the electrons are counted, or perhaps ten Pt atoms if only the valence electrons are counted. Thus, the wave function method, which has been extremely successful in studying the properties of small molecules, is unsuitable for studies of large, extended solids. Interestingly, this difficulty was recognized by Dirac as early as 1929, when he wrote "The underlying physical laws necessary for the mathematical theory of a large part of physics and the whole of chemistry are thus completely known, and the difficulty is only that the application of these laws leads to equations much too complicated to be soluble." dirac1929:quant-mechan-many-elect-system.

In 1964, Hohenberg and Kohn showed that the ground state total energy of a system of interacting electrons is a unique functional of the electron density PhysRev.136.B864. By definition, a function returns a number when given a number. For example, in \(f(x)=x^2\), \(f(x)\) is the function, and it equals four when \(x=2\). A functional returns a number when given a function. Thus, in \(g(f(x))=\int_0^\pi f(x) dx\), \(g(f(x))\) is the functional, and it is equal to two when \(f(x)=\sin(x)\). Hohenberg and Kohn further identified a variational principle that appeared to reduce the problem of finding the ground state energy of an electron gas in an external potential (i.e., in the presence of ion cores) to that of the minimization of a functional of the three-dimensional density function. Unfortunately, the definition of the functional involved a set of 3N-dimensional trial wave functions.
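The distinction between a function and a functional can be checked numerically. The short sketch below is an illustration added here, not part of the original calculations; the helper name `integrate` and the choice of the composite Simpson rule are assumptions made for the example. It evaluates the function \(f(x)=x^2\) at \(x=2\) and the functional \(\int_0^\pi f(x) dx\) for \(f(x)=\sin(x)\).

```python
import math


def f(x):
    """A function: takes a number and returns a number."""
    return x**2


def integrate(func, a, b, n=1000):
    """A functional: takes a function and returns a number.

    Approximates the integral of func from a to b with the composite
    Simpson rule on n (even) intervals.
    """
    h = (b - a) / n
    total = func(a) + func(b)
    for i in range(1, n):
        # interior points alternate between weights 4 and 2
        total += (4 if i % 2 else 2) * func(a + i * h)
    return total * h / 3


print('f(2) = {0}'.format(f(2)))
print('g[sin] = {0:1.6f}'.format(integrate(math.sin, 0, math.pi)))
```

The first call returns four and the second returns two to within the integration error, matching the values quoted above.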

In 1965, Kohn and Sham made a significant breakthrough when they showed that the problem of many interacting electrons in an external potential can be mapped exactly to a set of noninteracting electrons in an effective external potential PhysRev.140.A1133. This led to a set of self-consistent, single particle equations known as the Kohn-Sham (KS) equations:

\begin{equation} \label{eq:KS} \left( -\frac{1}{2}\nabla^2 + v_{eff}(\mathbf{r}) - \epsilon_j \right) \varphi_j(\mathbf{r}) = 0, \end{equation}

with

\begin{equation} \label{eq:veff} v_{eff}(\mathbf{r}) = v(\mathbf{r}) + \int \frac{n(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|} d\mathbf{r}' + v_{xc}(\mathbf{r}), \end{equation}

where \(v(\mathbf{r})\) is the external potential and \(v_{xc}(\mathbf{r})\) is the exchange-correlation potential, which depends on the entire density function. Thus, the density needs to be known in order to define the effective potential so that Eq. \eqref{eq:KS} can be solved. \(\varphi_j(\mathbf{r})\) corresponds to the \(j^{th}\) KS orbital of energy \(\epsilon_j\).

The ground state density is given by:

\begin{equation} \label{eq:density} n(\mathbf{r}) = \sum_{j=1}^{N} |\varphi_j(\mathbf{r})|^2. \end{equation}

To solve Eq. \eqref{eq:KS} then, an initial guess is used for \(\varphi_j(\mathbf{r})\), which is used to generate the density in Eq. \eqref{eq:density}, which is subsequently used in Eq. \eqref{eq:veff}. Eq. \eqref{eq:KS} is then solved for \(\varphi_j(\mathbf{r})\) iteratively until the \(\varphi_j(\mathbf{r})\) that result from the solution are the same as the \(\varphi_j(\mathbf{r})\) that are used to define the equations, that is, the solutions are self-consistent. Finally, the ground state energy is given by:

\begin{equation} \label{eq:dftEnergy} E = \sum_j \epsilon_j + E_{xc}[n(\mathbf{r})] - \int v_{xc}(\mathbf{r}) n(\mathbf{r}) d\mathbf{r} - \frac{1}{2} \int\int \frac{n(\mathbf{r}) n(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|} d\mathbf{r}' d\mathbf{r}, \end{equation}

where \(E_{xc}[n(\mathbf{r})]\) is the exchange-correlation energy functional. Walter Kohn shared the Nobel prize in Chemistry in 1998 for this work RevModPhys.71.1253. The other half of the prize went to John Pople for his efforts in wave function based quantum mechanical methods RevModPhys.71.1267. Provided the exchange-correlation energy functional is known, Eq. \eqref{eq:dftEnergy} is exact. However, the exact form of the exchange-correlation energy functional is not known, thus approximations for this functional must be used.
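The idea of iterating to self-consistency can be illustrated with a toy problem that is much simpler than the KS equations. In the sketch below, which is only an analogy and not how VASP works, a single level has an energy that depends on its own occupation, and the occupation in turn depends on the energy. All names and parameter values (`occupation`, `scf`, `eps0`, `U`, `mu`, `kT`, `mix`) are hypothetical and chosen for illustration. The loop stops when the output occupation equals the input occupation. Linear mixing of old and new values is one common way to stabilize such iterations, and it is used here as an assumption.

```python
import math


def occupation(n_in, eps0=0.0, U=1.0, mu=0.5, kT=0.1):
    """Toy 'solve' step: occupation of one level whose energy depends on n_in."""
    eps = eps0 + U * n_in  # effective level energy built from the input occupation
    return 1.0 / (1.0 + math.exp((eps - mu) / kT))


def scf(n_guess, mix=0.2, tol=1e-8, maxiter=100):
    """Iterate until the output occupation equals the input occupation."""
    n = n_guess
    for iteration in range(1, maxiter + 1):
        n_out = occupation(n)
        if abs(n_out - n) < tol:
            return n, iteration
        # linear mixing of old and new values damps oscillations
        n = (1 - mix) * n + mix * n_out
    raise RuntimeError('SCF did not converge')


n_scf, n_iter = scf(0.9)
print('self-consistent occupation = {0:1.6f} after {1} iterations'.format(n_scf, n_iter))
```

With these parameters the self-consistent occupation is 0.5, because at that value the level energy equals `mu`.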

2.2 Exchange correlation functionals

The two main types of exchange/correlation functionals used in DFT are the local density approximation (LDA) and the generalized gradient approximation (GGA). In the LDA, the exchange-correlation functional is defined for an electron in a uniform electron gas of density \(n\) PhysRev.140.A1133. It is exact for a uniform electron gas, and is anticipated to be a reasonable approximation for slowly varying densities. In molecules and solids, however, the density tends to vary substantially in space. Despite this, the LDA has been very successfully used in many systems. It tends to predict overbonding in both molecular and solid systems fuchs1998:pseud, and it tends to make semiconductor systems too metallic (the band gap problem) perdew1982:elect-kohn-sham.

The generalized gradient approximation includes corrections for gradients in the electron density, and is often implemented as a corrective function of the LDA. The form of this corrective function, or "exchange enhancement" function, determines which functional it is, e.g. PBE, RPBE, revPBE, etc. hammer1999:improv-pbe. In this book the PBE GGA functional is used the most. Nørskov and coworkers have found that the RPBE functional gives superior chemisorption energies for atomic and molecular bonding to surfaces, but that it gives worse bulk properties, such as lattice constants, compared to experimental data hammer1999:improv-pbe.

Finally, a growing number of new types of functionals are appearing in the literature. The so-called hybrid functionals, such as B3LYP, are more popular with Gaussian basis sets (e.g. in Gaussian), but they are presently inefficient with planewave basis sets. None of these other types of functionals were used in this work. For more details see Chapter 6 in Ref. koch2001 and Thomas Bligaard's thesis on exchange and correlation functionals bligaard2000:exchan-correl-funct.

2.3 Basis sets

Briefly, VASP utilizes planewaves as the basis set to expand the Kohn-Sham orbitals. In a periodic solid, one can use Bloch's theorem to show that the wave function for an electron can be expressed as the product of a planewave and a function with the periodicity of the lattice ashcroft-mermin:

\begin{equation} \psi_{n\mathbf{k}}(\mathbf{r})=\exp(i\mathbf{k}\cdot\mathbf{r})u_{n\mathbf{k}}(\mathbf{r}) \end{equation}

where \(\mathbf{r}\) is a position vector, and \(\mathbf{k}\) is a so-called wave vector that will only have certain allowed values defined by the size of the unit cell. Bloch's theorem sets the stage for using planewaves as a basis set, because it suggests a planewave character of the wave function. If the periodic function \(u_{n\mathbf{k}}(\mathbf{r})\) is also expanded in terms of planewaves determined by wave vectors of the reciprocal lattice vectors, \(\mathbf{G}\), then the wave function can be expressed completely in terms of a sum of planewaves payne1992:iterat:

\begin{equation} \psi_i(\mathbf{r})=\sum_\mathbf{G} c_{i,\mathbf{k+G}} \exp(i(\mathbf{k}+\mathbf{G})\cdot\mathbf{r}), \end{equation}

where \(c_{i,\mathbf{k+G}}\) are now coefficients that can be varied to determine the lowest energy solution. This also converts Eq. \eqref{eq:KS} from an integral equation to a set of algebraic equations that can readily be solved using matrix algebra.

In aperiodic systems, such as systems with even one defect, or randomly ordered alloys, there is no periodic unit cell. Instead one must represent the portion of the system of interest in a supercell, which is then subjected to the periodic boundary conditions so that a planewave basis set can be used. It then becomes necessary to ensure the supercell is large enough to avoid interactions between the defects in neighboring supercells. The case of the randomly ordered alloy is virtually hopeless as the energy of different configurations will fluctuate statistically about an average value. These systems were not considered in this work, and for more detailed discussions the reader is referred to Ref. makov1995:period-bound-condit. Once a supercell is chosen, however, Bloch's theorem can be applied to the new artificially periodic system.

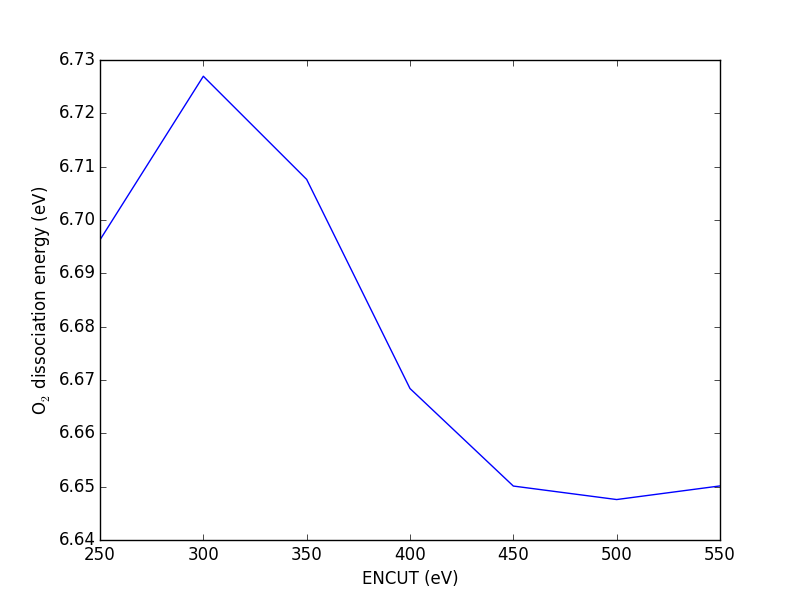

To get a perfect expansion, one needs an infinite number of planewaves. Luckily, the coefficients of the planewaves must go to zero for high energy planewaves, otherwise the energy of the wave function would go to infinity. This provides justification for truncating the planewave basis set above a cutoff energy. Careful testing of the effect of the cutoff energy on the total energy can be done to determine a suitable cutoff energy. The cutoff energy required to obtain a particular convergence precision is also element dependent, as shown in Table tab:pwcut. It can also vary with the "softness" of the pseudopotential. Thus, careful testing should be done to ensure the desired level of convergence of properties in different systems. Table tab:pwcut refers to convergence of total energies. These energies are rarely considered directly; it is usually differences in energy that are important. These tend to converge with the planewave cutoff energy much more quickly than total energies, due to cancellations of convergence errors. In this work, 350 eV was found to be suitable for the H adsorption calculations, but a cutoff energy of 450 eV was required for O adsorption calculations.

| Element/potential | Low precision (eV) | High precision (eV) |
|---|---|---|
| Mo | 168 | 293 |
| O | 300 | 520 |
| O_sv | 1066 | 1847 |
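To get a feeling for why the cutoff energy matters for cost, one can count how many planewaves fall below a given cutoff. The sketch below is an added illustration under simplifying assumptions: a cubic cell of side 10 Å, only the \(\mathbf{k}=0\) point, and a planewave kinetic energy of \(\hbar^2|\mathbf{G}|^2/2m_e\) with \(\hbar^2/2m_e \approx 3.81\) eV Å\(^2\). The function name `count_planewaves` is hypothetical, and the numbers it produces are not the basis set sizes VASP would report.

```python
import math

HBAR2_OVER_2M = 3.81  # hbar^2 / (2 m_e) in eV * Angstrom^2 (rounded)


def count_planewaves(a, ecut):
    """Count planewaves with kinetic energy <= ecut (eV).

    Assumes a cubic cell of side a (Angstrom) and the k = 0 point, so the
    allowed wave vectors are G = (2 pi / a) * (i, j, k) with integer i, j, k.
    """
    g0 = 2 * math.pi / a  # spacing of reciprocal lattice points
    nmax = int(math.sqrt(ecut / HBAR2_OVER_2M) / g0) + 1
    count = 0
    for i in range(-nmax, nmax + 1):
        for j in range(-nmax, nmax + 1):
            for k in range(-nmax, nmax + 1):
                g2 = g0**2 * (i * i + j * j + k * k)
                if HBAR2_OVER_2M * g2 <= ecut:
                    count += 1
    return count


# the low and high precision cutoffs listed for O in the table above
for ecut in (300, 520):
    print('Ecut = {0} eV: {1} planewaves'.format(ecut, count_planewaves(10.0, ecut)))
```

The count grows roughly as the cutoff energy to the power 3/2, since it is proportional to the volume of a sphere in reciprocal space.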

Bloch's theorem eliminates the need to calculate an infinite number of wave functions, because there are only a finite number of electrons in the unit (super) cell. However, there are still an infinite number of discrete k points that must be considered, and the energy of the unit cell is calculated as an integral over these points. It turns out that wave functions at k points that are close together are similar, thus an interpolation scheme can be used with a finite number of k points. This also converts the integral used to determine the energy into a sum over the k points, which are suitably weighted to account for the finite number of them. There will be errors in the total energy associated with the finite number of k, but these can be reduced and tested for convergence by using higher k-point densities. An excellent discussion of this for aperiodic systems can be found in Ref. makov1995:period-bound-condit.

The most common schemes for generating k points are the Chadi-Cohen scheme PhysRevB.8.5747, and the Monkhorst-Pack scheme PhysRevB.13.5188. The use of these k point setups amounts to an expansion of the periodic function in reciprocal space, which allows a straight-forward interpolation of the function between the points that is more accurate than with other k point generation schemes PhysRevB.13.5188.
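As an added illustration, a Monkhorst-Pack grid is simple to generate by hand. Along each reciprocal lattice direction with \(q\) divisions, the fractional coordinates are \(u_r = (2r - q - 1)/(2q)\) for \(r = 1, \ldots, q\). The sketch below builds the full grid without any symmetry reduction; the function name `monkhorst_pack` and the equal weights are assumptions made for this example.

```python
from itertools import product


def monkhorst_pack(size):
    """Return fractional k-point coordinates for a (q1, q2, q3) Monkhorst-Pack grid."""
    axes = [[(2 * r - q - 1) / (2.0 * q) for r in range(1, q + 1)]
            for q in size]
    return list(product(*axes))


kpts = monkhorst_pack((2, 2, 1))
weight = 1.0 / len(kpts)  # equal weights before any symmetry reduction
for k in kpts:
    print('k = ({0: 1.3f}, {1: 1.3f}, {2: 1.3f})  weight = {3:1.2f}'.format(
        k[0], k[1], k[2], weight))
```

A grid with an even number of divisions does not include the zone center along that direction, while an odd number does.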

2.4 Pseudopotentials

The core electrons of an atom are computationally expensive with planewave basis sets because they are highly localized. This means that a very large number of planewaves are required to expand their wave functions. Furthermore, the contributions of the core electrons to bonding compared to those of the valence electrons are usually negligible. In fact, the primary role of the core electron wave functions is to ensure proper orthogonality between the valence electrons and core states. Consequently, it is desirable to replace the atomic potential due to the core electrons with a pseudopotential that has the same effect on the valence electrons PhysRevB.43.1993. There are essentially two kinds of pseudopotentials, norm-conserving soft pseudopotentials PhysRevB.43.1993 and Vanderbilt ultrasoft pseudopotentials PhysRevB.41.7892. In either case, the pseudopotential function is generated from an all-electron calculation of an atom in some reference state. In norm-conserving pseudopotentials, the charge enclosed in the pseudopotential region is the same as that enclosed by the same space in an all-electron calculation. In ultrasoft pseudopotentials, this requirement is relaxed and charge augmentation functions are used to make up the difference. As its name implies, this allows a "softer" pseudopotential to be generated, which means fewer planewaves are required to expand it.

The pseudopotentials are not unique, and calculated properties depend on them. However, there are standard methods for ensuring the quality and transferability (to different chemical environments) of the pseudopotentials PhysRevB.56.15629.


VASP provides a database of PAW potentials PhysRevB.50.17953,PhysRevB.59.1758.

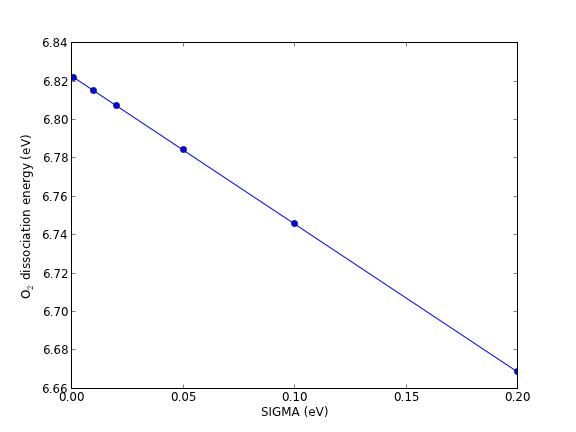

2.5 Fermi Temperature and band occupation numbers

At absolute zero, the occupancies of the bands of a system are well-defined step functions; all bands up to the Fermi level are occupied, and all bands above the Fermi level are unoccupied. There is a particular difficulty in the calculation of the electronic structures of metals compared to semiconductors and molecules. In molecules and semiconductors, there is a clear energy gap between the occupied states and unoccupied states. Thus, the occupancies are insensitive to changes in the energy that occur during the self-consistency cycles. In metals, however, the density of states is continuous at the Fermi level, and there are typically a substantial number of states that are close in energy to the Fermi level. Consequently, small changes in the energy can dramatically change the occupation numbers, resulting in instabilities that make it difficult to converge to the occupation step function. A related problem is that the Brillouin zone integral (which in practice is performed as a sum over a finite number of k points) that defines the band energy converges very slowly with the number of k points due to the discontinuity in occupancies in a continuous distribution of states for metals gillan1989:calcul,Kresse199615. The difficulty arises because the temperature in most DFT calculations is at absolute zero. At higher temperatures, the DOS is smeared across the Fermi level, resulting in a continuous occupation function over the distribution of states. A finite-temperature version of DFT was developed PhysRev.137.A1441, which is the foundation on which one solution to this problem is based. In this solution, the step function is replaced by a smoothly varying function such as the Fermi-Dirac function at a small, but non-zero temperature Kresse199615. The total energy is then extrapolated back to absolute zero.
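The effect of replacing the step function with a Fermi-Dirac function can be seen in a few lines of code. This is an added sketch; the function names, the energies, and the smearing width `kT = 0.1` eV are illustrative assumptions, not recommended settings.

```python
import math


def step(eps, mu):
    """Zero-temperature occupation: 1 below the Fermi level, 0 otherwise."""
    return 1.0 if eps < mu else 0.0


def fermi_dirac(eps, mu, kT):
    """Occupation of a state at energy eps (eV) for Fermi level mu and width kT (eV)."""
    x = (eps - mu) / kT
    if x > 700:  # avoid overflow in exp; the occupation is numerically zero
        return 0.0
    return 1.0 / (1.0 + math.exp(x))


mu, kT = 0.0, 0.1
for eps in (-0.2, -0.05, 0.0, 0.05, 0.2):
    print('eps = {0: 1.2f} eV  step = {1:1.0f}  Fermi-Dirac = {2:1.3f}'.format(
        eps, step(eps, mu), fermi_dirac(eps, mu, kT)))
```

States within a few `kT` of the Fermi level are partially occupied, so a small shift in their energies changes the occupations smoothly rather than abruptly.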

2.6 Spin polarization and magnetism

There are two final points that need to be discussed about these calculations, spin polarization and dipole corrections. Spin polarization is important for systems that contain net spin. For example, iron, cobalt and nickel are magnetic because they have more electrons with spin "up" than spin "down" (or vice versa). Spin polarization must also be considered in atoms and molecules with unpaired electrons, such as hydrogen and oxygen atoms, oxygen molecules and radicals. For example, there are two spin configurations for an oxygen molecule, the singlet state with no unpaired electrons, and the triplet state with two unpaired electrons. The oxygen triplet state is lower in energy than the oxygen singlet state, and thus it corresponds to the ground state for an oxygen molecule. A classically known problem involving spin polarization is the dissociation of a hydrogen molecule. In this case, the molecule starts with no net spin, but it dissociates into two atoms, each of which has an unpaired electron. See section 5.3.5 in Reference koch2001 for more details on this.

In VASP, spin polarization is not considered by default; it must be turned on, and an initial guess for the magnetic moment of each atom in the unit cell must be provided (typically about one Bohr-magneton per unpaired electron). For Fe, Co, and Ni, the experimental values are 2.22, 1.72, and 0.61 Bohr-magnetons, respectively kittel and are usually good initial guesses. See Reference PhysRevB.56.15629 for a very thorough discussion of the determination of the magnetic properties of these metals with DFT. For a hydrogen atom, an initial guess of 1.0 Bohr-magnetons (corresponding to one unpaired electron) is usually good. An oxygen atom has two unpaired electrons, thus an initial guess of 2.0 Bohr-magnetons should be used. The spin-polarized solution is sensitive to the initial guess, and typically converges to the closest solution. Thus, a magnetic initial guess usually must be provided to get a magnetic solution. Finally, unless an adsorbate is on a magnetic metal surface, spin polarization typically does not need to be considered, although the gas-phase reference state calculation may need to be done with spin-polarization.

The downside of including spin polarization is that it essentially doubles the calculation time.

2.7 Recommended reading

The original papers on DFT are PhysRev.136.B864,PhysRev.140.A1133.

Kohn's Nobel Lecture RevModPhys.71.1253 and Pople's Nobel Lecture RevModPhys.71.1267 are good reads.

This paper by Hoffmann RevModPhys.60.601 is a nice review of solid state physics from a chemist's point of view.

All calculations in this book were performed using VASP Kresse199615,PhysRevB.54.11169,PhysRevB.49.14251,PhysRevB.47.558 with the projector augmented wave (PAW) potentials provided in VASP.

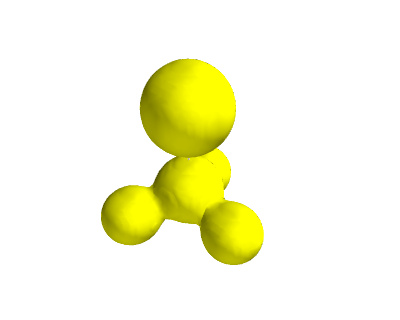

3 Molecules

In this chapter we consider how to construct models of molecules, how to manipulate them, and how to calculate many properties of molecules. For a nice comparison of VASP and Gaussian see paier:234102.

3.1 Defining and visualizing molecules

We start by learning how to define a molecule and visualize it. We will begin with defining molecules from scratch, then reading molecules from data files, and finally using some built-in databases in ase.

3.1.1 From scratch

When there is no data file for the molecule you want, or no database to get it from, you have to define the geometry of your atoms by hand. Here is how that is done for a CO molecule (Figure fig:co-origin). We must define the type and position of each atom, and the unit cell the atoms are in.

    from ase import Atoms, Atom
    from ase.io import write

    # define an Atoms object
    atoms = Atoms([Atom('C', [0., 0., 0.]),
                   Atom('O', [1.1, 0., 0.])],
                  cell=(10, 10, 10))

    print('V = {0:1.0f} Angstrom^3'.format(atoms.get_volume()))
    write('images/simple-cubic-cell.png', atoms, show_unit_cell=2)

V = 1000 Angstrom^3

Figure 2: Image of a CO molecule with the C at the origin. \label{fig:co-origin}

There are two inconvenient features of the simple cubic cell:

- Since the CO molecule is at the corner, its electron density is spread over the 8 corners of the box, which is not convenient for visualization later (see Visualizing electron density).

- Due to the geometry of the cube, you need fairly large cubes to make sure the electron density of the molecule does not overlap with that of its images. Electron-electron interactions are repulsive, and the overlap makes the energy increase significantly. Here, the CO molecule has 6 images due to periodic boundary conditions that are 10 Å away. The volume of the unit cell is 1000 Å\(^3\).

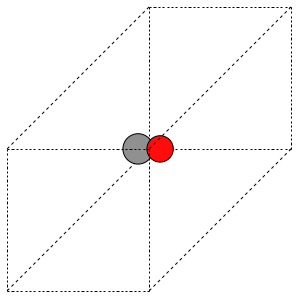

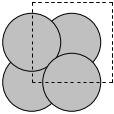

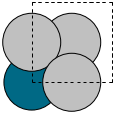

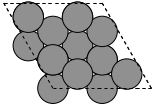

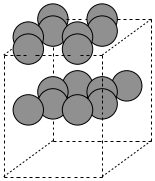

The first problem is easily solved by centering the atoms in the unit cell. The second problem can be solved by using a face-centered cubic lattice, which is the lattice with the closest packing. We show the results of the centering in Figure fig:co-fcc, where we have guessed values for \(b\) until the CO molecules are on average 10 Å apart. Note the final volume is only about 715 Å\(^3\), which is smaller than the cube. This will result in less computational time to compute properties.

    from ase import Atoms, Atom
    from ase.io import write

    b = 7.1
    atoms = Atoms([Atom('C', [0., 0., 0.]),
                   Atom('O', [1.1, 0., 0.])],
                  cell=[[b, b, 0.],
                        [b, 0., b],
                        [0., b, b]])

    print('V = {0:1.0f} Ang^3'.format(atoms.get_volume()))

    atoms.center()  # translate atoms to center of unit cell
    write('images/fcc-cell.png', atoms, show_unit_cell=2)

V = 716 Ang^3

Figure 3: CO in a face-centered cubic unit cell. \label{fig:co-fcc}

At this point you might ask, "How do you know the distance to the neighboring image?" The ag viewer lets you compute this graphically, but we can use code to determine this too. All we have to do is figure out the length of each lattice vector, because these are what separate the atoms in the images. We use the numpy module to compute the length of a vector as the square root of the sum of its squared elements.

    from ase import Atoms, Atom
    import numpy as np

    b = 7.1
    atoms = Atoms([Atom('C', [0., 0., 0.]),
                   Atom('O', [1.1, 0., 0.])],
                  cell=[[b, b, 0.],
                        [b, 0., b],
                        [0., b, b]])

    # get unit cell vectors and their lengths
    (a1, a2, a3) = atoms.get_cell()
    print('|a1| = {0:1.2f} Ang'.format(np.sum(a1**2)**0.5))
    print('|a2| = {0:1.2f} Ang'.format(np.linalg.norm(a2)))
    print('|a3| = {0:1.2f} Ang'.format(np.sum(a3**2)**0.5))

Output:

    |a1| = 10.04 Ang
    |a2| = 10.04 Ang
    |a3| = 10.04 Ang
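The volume saving of the face-centered cubic cell can also be verified without ase. The sketch below is an added cross-check that uses only the standard library; the helper names `dot`, `cross`, and `norm` are ours. It computes the cell volume as the scalar triple product of the lattice vectors, and compares it to a simple cubic cell with the same distance between images.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def norm(u):
    return math.sqrt(dot(u, u))


b = 7.1
a1, a2, a3 = [b, b, 0.], [b, 0., b], [0., b, b]

d = norm(a1)                              # distance to the neighboring image
volume_fcc = abs(dot(a1, cross(a2, a3)))  # scalar triple product
volume_cubic = d**3                       # simple cubic cell with the same image distance

print('image distance = {0:1.2f} Ang'.format(d))
print('V(fcc)   = {0:1.0f} Ang^3'.format(volume_fcc))
print('V(cubic) = {0:1.0f} Ang^3'.format(volume_cubic))
```

For the same image distance, the simple cubic cell is larger than the fcc cell by a factor of \(\sqrt{2}\).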

3.1.2 Reading other data formats into a calculation

ase.io.read supports many different file formats:

Known formats:

| Format | Short name |
|---|---|
| GPAW restart-file | gpw |
| Dacapo netCDF output file | dacapo |
| Old ASE netCDF trajectory | nc |
| Virtual Nano Lab file | vnl |
| ASE pickle trajectory | traj |
| ASE bundle trajectory | bundle |
| GPAW text output | gpaw-text |
| CUBE file | cube |
| XCrySDen Structure File | xsf |
| Dacapo text output | dacapo-text |
| XYZ-file | xyz |
| VASP POSCAR/CONTCAR file | vasp |
| VASP OUTCAR file | vasp_out |
| SIESTA STRUCT file | struct_out |
| ABINIT input file | abinit |
| V_Sim ascii file | v_sim |
| Protein Data Bank | pdb |
| CIF-file | cif |
| FHI-aims geometry file | aims |
| FHI-aims output file | aims_out |
| VTK XML Image Data | vti |
| VTK XML Structured Grid | vts |
| VTK XML Unstructured Grid | vtu |
| TURBOMOLE coord file | tmol |
| TURBOMOLE gradient file | tmol-gradient |
| exciting input | exi |
| AtomEye configuration | cfg |
| WIEN2k structure file | struct |
| DftbPlus input file | dftb |
| CASTEP geom file | cell |
| CASTEP output file | castep |
| CASTEP trajectory file | geom |
| ETSF format | etsf.nc |
| DFTBPlus GEN format | gen |
| CMR db/cmr-file | db |
| CMR db/cmr-file | cmr |
| LAMMPS dump file | lammps |
| Gromacs coordinates | gro |

You can read files in the XYZ format to create ase.Atoms objects. Here is what an XYZ file might look like:

#+include: molecules/isobutane.xyz

The first line is the number of atoms in the file. The second line is often a comment. What follows is one line per atom with the symbol and Cartesian coordinates in Å. Note that the XYZ format does not have unit cell information in it, so you will have to figure out a way to provide it. In this example, we center the atoms in a box with vacuum on all sides (Figure fig:isobutane).

    from ase.io import read, write

    atoms = read('molecules/isobutane.xyz')
    atoms.center(vacuum=5)
    write('images/isobutane-xyz.png', atoms, show_unit_cell=2)

Figure 4: An isobutane molecule read in from an XYZ formatted data file. \label{fig:isobutane}
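To make the layout of the XYZ format concrete, here is an added sketch of a minimal reader written without ase. The function name `parse_xyz` is hypothetical, and the example data is the CO geometry defined by hand earlier in this section, not the isobutane file. In practice you would use ase.io.read as shown above.

```python
xyz_text = """2
CO molecule, coordinates in Angstrom
C 0.0 0.0 0.0
O 1.1 0.0 0.0
"""


def parse_xyz(text):
    """Parse XYZ-formatted text into a comment and a list of (symbol, (x, y, z))."""
    lines = text.splitlines()
    natoms = int(lines[0])  # first line: number of atoms
    comment = lines[1]      # second line: free-form comment
    atoms = []
    for line in lines[2:2 + natoms]:
        # one line per atom: symbol followed by Cartesian coordinates
        symbol, x, y, z = line.split()[:4]
        atoms.append((symbol, (float(x), float(y), float(z))))
    return comment, atoms


comment, atoms = parse_xyz(xyz_text)
print(comment)
for symbol, position in atoms:
    print('{0:2s} {1}'.format(symbol, position))
```

Nothing in the file describes a unit cell, which is why the cell has to be supplied separately.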

3.1.3 Predefined molecules

ase defines a number of molecular geometries in the ase.data.molecules database. For example, the database includes the molecules in the G2/97 database curtiss:1063. This database contains a broad set of atoms and molecules for which good experimental data exists, making them useful for benchmarking studies. See this site for the original files.

The coordinates for the atoms in the database are MP2(full)/6-31G(d) optimized geometries. Here is a list of all the species available in ase.data.g2. You may be interested in reading about some of the other databases in ase.data too.

    from ase.data import g2

    # list() so the keys can be sliced under Python 3
    keys = list(g2.data.keys())

    # print in 3 columns; // is integer division
    for i in range(len(keys) // 3):
        print('{0:25s}{1:25s}{2:25s}'.format(*tuple(keys[i * 3: i * 3 + 3])))

isobutene CH3CH2OH CH3COOH COF2 CH3NO2 CF3CN CH3OH CCH CH3CH2NH2 PH3 Si2H6 O3 O2 BCl3 CH2_s1A1d Be H2CCl2 C3H9C C3H9N CH3CH2OCH3 BF3 CH3 CH4 S2 C2H6CHOH SiH2_s1A1d H3CNH2 CH3O H BeH P C3H4_C3v C2F4 OH methylenecyclopropane F2O SiCl4 HCF3 HCCl3 C3H7 CH3CH2O AlF3 CH2NHCH2 SiH2_s3B1d H2CF2 SiF4 H2CCO PH2 OCS HF NO2 SH2 C3H4_C2v H2O2 CH3CH2Cl isobutane CH3COF HCOOH CH3ONO C5H8 2-butyne SH NF3 HOCl CS2 P2 C CH3S O C4H4S S C3H7Cl H2CCHCl C2H6 CH3CHO C2H4 HCN C2H2 C2Cl4 bicyclobutane H2 C6H6 N2H4 C4H4NH H2CCHCN H2CCHF cyclobutane HCl CH3OCH3 Li2 Na CH3SiH3 NaCl CH3CH2SH OCHCHO SiH4 C2H5 SiH3 NH ClO AlCl3 CCl4 NO C2H3 ClF HCO CH3CONH2 CH2SCH2 CH3COCH3 C3H4_D2d CH CO CN F CH3COCl N CH3Cl Si C3H8 CS N2 Cl2 NCCN F2 CO2 Cl CH2OCH2 H2O CH3CO SO HCOOCH3 butadiene ClF3 Li PF3 B CH3SH CF4 C3H6_Cs C2H6NH N2O LiF H2COH cyclobutene LiH SiO Si2 C2H6SO C5H5N trans-butane Na2 C4H4O SO2 NH3 NH2 CH2_s3B1d ClNO C3H6_D3h Al CH3SCH3 H2CO CH3CN

Some other databases include the ase.data.s22 for weakly interacting dimers and complexes, and ase.data.extra_molecules which has a few extras like biphenyl and C60.

Here is an example of getting the geometry of an acetonitrile molecule and writing an image to a file. Note that the default unit cell is a 1 Å × 1 Å × 1 Å cubic cell. That is too small to use if your calculator uses periodic boundary conditions. We center the atoms in the unit cell and add 6 Å of vacuum on each side. In the write command we use the option show_unit_cell=2 to draw the unit cell boundaries. See Figure fig:ch3cn.

from ase.structure import molecule from ase.io import write atoms = molecule('CH3CN') atoms.center(vacuum=6) print('unit cell') print('---------') print(atoms.get_cell()) write('images/ch3cn.png', atoms, show_unit_cell=2)

unit cell --------- [[ 13.775328 0. 0. ] [ 0. 13.537479 0. ] [ 0. 0. 15.014576]]

Figure 5: A CH3CN molecule in a box. \label{fig:ch3cn}
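To make the effect of centering with vacuum concrete, here is a minimal sketch in plain Python that does not call ase. It assumes that each cell length ends up as the extent of the atoms along that axis plus twice the vacuum, which is consistent with the cell printed above (every length is somewhat larger than 12 Å). The helper name `padded_cell_lengths` and the coordinates are hypothetical and chosen only for illustration.

```python
def padded_cell_lengths(positions, vacuum):
    """Return orthorhombic cell lengths that leave `vacuum` on each side."""
    lengths = []
    for axis in range(3):
        coords = [p[axis] for p in positions]
        extent = max(coords) - min(coords)  # size of the molecule on this axis
        lengths.append(extent + 2.0 * vacuum)
    return lengths


# hypothetical coordinates (angstroms) of a linear molecule lying on the z-axis
positions = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.2), (0.0, 0.0, 2.4)]
cell = padded_cell_lengths(positions, vacuum=6.0)
print(cell)
```

The two directions with no extent get exactly 12 Å, and the molecular axis gets 12 Å plus the length of the molecule.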

It is possible to rotate the atoms with ase.io.write if you wanted to see pictures from another angle. In the next example we rotate 45 degrees about the $x$-axis, then 45 degrees about the $y$-axis. Note that this only affects the image, not the actual coordinates. See Figure fig:ch3cn-rot.

from ase.structure import molecule from ase.io import write atoms = molecule('CH3CN') atoms.center(vacuum=6) print('unit cell') print('---------') print(atoms.get_cell()) write('images/ch3cn-rotated.png', atoms, show_unit_cell=2, rotation='45x,45y,0z')

unit cell --------- [[ 13.775328 0. 0. ] [ 0. 13.537479 0. ] [ 0. 0. 15.014576]]

Figure 6: The rotated version of CH3CN. \label{fig:ch3cn-rot}

If you actually want to rotate the coordinates, there is a nice way to do that too, with the ase.Atoms.rotate method. Actually there are some subtleties in rotation. One rotates the molecule an angle (in radians) around a vector, but you have to choose whether the center of mass should be fixed or not. You also must decide whether or not the unit cell should be rotated. In the next example you can see the coordinates have changed due to the rotations. Note that the write function uses the rotation angle in degrees, while the rotate function uses radians.

from ase.structure import molecule from ase.io import write from numpy import pi atoms = molecule('CH3CN') atoms.center(vacuum=6) p1 = atoms.get_positions() atoms.rotate('x', pi/4, center='COM', rotate_cell=False) atoms.rotate('y', pi/4, center='COM', rotate_cell=False) write('images/ch3cn-rotated-2.png', atoms, show_unit_cell=2) print('difference in positions after rotating') print('atom difference vector') print('--------------------------------------') p2 = atoms.get_positions() diff = p2 - p1 for i, d in enumerate(diff): print('{0} {1}'.format(i, d))

difference in positions after rotating atom difference vector -------------------------------------- 0 [-0.65009456 0.91937255 0.65009456] 1 [ 0.08030744 -0.11357187 -0.08030744] 2 [ 0.66947344 -0.94677841 -0.66947344] 3 [-0.32532156 0.88463727 1.35030756] 4 [-1.35405183 1.33495444 -0.04610517] 5 [-0.8340703 1.33495444 1.2092413 ]

Figure 7: Rotated CH3CN molecule

Note in this last case the unit cell is oriented differently than the previous example, since we chose not to rotate the unit cell.
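The degrees versus radians distinction is easy to get wrong, so here is a small plain-Python sketch of what two successive 45 degree rotations do to a single point. It rotates about the origin rather than the center of mass, uses the usual right-handed rotation matrices, and is not meant to reproduce the sign conventions of ase; the function names are hypothetical.

```python
import math


def rotate_about_x(p, angle):
    """Rotate the point p = (x, y, z) by `angle` radians about the x-axis."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (x, c * y - s * z, s * y + c * z)


def rotate_about_y(p, angle):
    """Rotate the point p = (x, y, z) by `angle` radians about the y-axis."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


angle = math.radians(45)  # 45 degrees is pi / 4 radians
p0 = (0.0, 0.0, 1.0)
p1 = rotate_about_y(rotate_about_x(p0, angle), angle)
print(p1)
```

A rotation never changes the distance of a point from the rotation axis origin, which is a quick sanity check on any rotation code.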

3.1.4 Combining Atoms objects

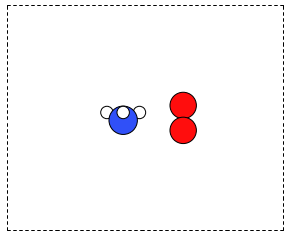

It is frequently useful to combine two Atoms objects, e.g. for computing reaction barriers, or other types of interactions. In ase, we simply add two Atoms objects together. Here is an example of getting an ammonia and oxygen molecule in the same unit cell. See Figure fig:combined-atoms. We set the Atoms about three Å apart using the ase.Atoms.translate function.

from ase.structure import molecule from ase.io import write atoms1 = molecule('NH3') atoms2 = molecule('O2') atoms2.translate([3, 0, 0]) bothatoms = atoms1 + atoms2 bothatoms.center(5) write('images/bothatoms.png', bothatoms, show_unit_cell=2, rotation='90x')

Figure 8: Image featuring ammonia and oxygen molecule in one unit cell. \label{fig:combined-atoms}

3.2 Simple properties

Simple properties do not require a DFT calculation. They are typically only functions of the atom types and geometries.

3.2.1 Getting cartesian positions

If you want the \((x,y,z)\) coordinates of the atoms, use ase.Atoms.get_positions. If you are interested in the fractional coordinates, use ase.Atoms.get_scaled_positions.

from ase.structure import molecule atoms = molecule('C6H6') # benzene # access properties on each atom print(' # sym p_x p_y p_z') print('------------------------------') for i, atom in enumerate(atoms): print('{0:3d}{1:^4s}{2:-8.2f}{3:-8.2f}{4:-8.2f}'.format(i, atom.symbol, atom.x, atom.y, atom.z)) # get all properties in arrays sym = atoms.get_chemical_symbols() pos = atoms.get_positions() num = atoms.get_atomic_numbers() atom_indices = range(len(atoms)) print() print(' # sym at# p_x p_y p_z') print('-------------------------------------') for i, s, n, p in zip(atom_indices, sym, num, pos): px, py, pz = p print('{0:3d}{1:>3s}{2:8d}{3:-8.2f}{4:-8.2f}{5:-8.2f}'.format(i, s, n, px, py, pz))

# sym p_x p_y p_z ------------------------------ 0 C 0.00 1.40 0.00 1 C 1.21 0.70 0.00 2 C 1.21 -0.70 0.00 3 C 0.00 -1.40 0.00 4 C -1.21 -0.70 0.00 5 C -1.21 0.70 0.00 6 H 0.00 2.48 0.00 7 H 2.15 1.24 0.00 8 H 2.15 -1.24 0.00 9 H 0.00 -2.48 0.00 10 H -2.15 -1.24 0.00 11 H -2.15 1.24 0.00 () # sym at# p_x p_y p_z ------------------------------------- 0 C 6 0.00 1.40 0.00 1 C 6 1.21 0.70 0.00 2 C 6 1.21 -0.70 0.00 3 C 6 0.00 -1.40 0.00 4 C 6 -1.21 -0.70 0.00 5 C 6 -1.21 0.70 0.00 6 H 1 0.00 2.48 0.00 7 H 1 2.15 1.24 0.00 8 H 1 2.15 -1.24 0.00 9 H 1 0.00 -2.48 0.00 10 H 1 -2.15 -1.24 0.00 11 H 1 -2.15 1.24 0.00

3.2.2 Molecular weight and molecular formula


We can quickly compute the molecular weight of a molecule with this recipe. We use ase.Atoms.get_masses to get an array of the atomic masses of each atom in the Atoms object, and then just sum them up.

from ase.structure import molecule atoms = molecule('C6H6') masses = atoms.get_masses() molecular_weight = masses.sum() molecular_formula = atoms.get_chemical_formula(mode='reduce') # note use of two lines to keep length of line reasonable s = 'The molecular weight of {0} is {1:1.2f} gm/mol' print(s.format(molecular_formula, molecular_weight))

The molecular weight of C6H6 is 78.11 gm/mol

Note that the argument mode='reduce' for ase.Atoms.get_chemical_formula collects all the symbols to provide a molecular formula.
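The same sum can be written without ase if you already know the composition. The sketch below is only an illustration: the small table of abridged standard atomic weights and the function name `molecular_weight` are assumptions introduced here, not part of ase.

```python
# abridged standard atomic weights in g/mol (only a few elements, for illustration)
ATOMIC_WEIGHTS = {'H': 1.008, 'C': 12.011, 'N': 14.007, 'O': 15.999}


def molecular_weight(composition):
    """Sum the atomic weights for a composition such as {'C': 6, 'H': 6}."""
    return sum(ATOMIC_WEIGHTS[symbol] * count
               for symbol, count in composition.items())


benzene = {'C': 6, 'H': 6}
mw_benzene = molecular_weight(benzene)
print('The molecular weight of C6H6 is {0:1.2f} g/mol'.format(mw_benzene))
```

To two decimal places this agrees with the value obtained from ase.Atoms.get_masses above.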

3.2.3 Center of mass

The center of mass (COM) is defined as:

COM = \(\frac{\sum m_i \cdot r_i}{\sum m_i}\)

The center of mass is essentially the average position of the atoms, weighted by the mass of each atom. Here is an example of getting the center of mass from an Atoms object using ase.Atoms.get_center_of_mass.

from ase.structure import molecule import numpy as np # ammonia atoms = molecule('NH3') # cartesian coordinates print('COM1 = {0}'.format(atoms.get_center_of_mass())) # compute the center of mass by hand pos = atoms.positions masses = atoms.get_masses() COM = np.array([0., 0., 0.]) for m, p in zip(masses, pos): COM += m*p COM /= masses.sum() print('COM2 = {0}'.format(COM)) # one-line linear algebra definition of COM print('COM3 = {0}'.format(np.dot(masses, pos) / np.sum(masses)))

COM1 = [ 0.00000000e+00 5.91843349e-08 4.75457009e-02] COM2 = [ 0.00000000e+00 5.91843349e-08 4.75457009e-02] COM3 = [ 0.00000000e+00 5.91843349e-08 4.75457009e-02]

You can see that these centers of mass, which are calculated by different methods, are the same.

3.2.4 Moments of inertia

The moment of inertia is a measure of resistance to changes in rotation. It is defined by \(I = \sum_{i=1}^N m_i r_i^2\), where \(r_i\) is the distance of atom \(i\) from the axis of rotation. There are typically three moments of inertia, although some may be zero depending on symmetry, and others may be degenerate. There is a convenient function to get the moments of inertia: ase.Atoms.get_moments_of_inertia. Here are several examples of molecules with different types of symmetry:

from ase.structure import molecule print('linear rotors: I = [0 Ia Ia]') atoms = molecule('CO2') print(' CO2 moments of inertia: {}'.format(atoms.get_moments_of_inertia())) print('') print('symmetric rotors (Ia = Ib) < Ic') atoms = molecule('NH3') print(' NH3 moments of inertia: {}'.format(atoms.get_moments_of_inertia())) atoms = molecule('C6H6') print(' C6H6 moments of inertia: {}'.format(atoms.get_moments_of_inertia())) print('') print('symmetric rotors Ia < (Ib = Ic)') atoms = molecule('CH3Cl') print('CH3Cl moments of inertia: {}'.format(atoms.get_moments_of_inertia())) print('') print('spherical rotors Ia = Ib = Ic') atoms = molecule('CH4') print(' CH4 moments of inertia: {}'.format(atoms.get_moments_of_inertia())) print('') print('unsymmetric rotors Ia != Ib != Ic') atoms = molecule('C3H7Cl') print(' C3H7Cl moments of inertia: {}'.format(atoms.get_moments_of_inertia()))

linear rotors: I = [0 Ia Ia] CO2 moments of inertia: [ 0. 44.45384271 44.45384271] symmetric rotors (Ia = Ib) < Ic NH3 moments of inertia: [ 1.71012426 1.71012548 2.67031768] C6H6 moments of inertia: [ 88.77914641 88.77916799 177.5583144 ] symmetric rotors Ia < (Ib = Ic) CH3Cl moments of inertia: [ 3.20372189 37.97009644 37.97009837] spherical rotors Ia = Ib = Ic CH4 moments of inertia: [ 3.19145621 3.19145621 3.19145621] unsymmetric rotors Ia != Ib != Ic C3H7Cl moments of inertia: [ 19.41351508 213.18961963 223.16255537]
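To connect the definition \(I = \sum m_i r_i^2\) to the linear rotor result, here is a plain-Python sketch that evaluates the sum directly about a chosen axis through the center of mass. The geometry is a hypothetical linear O-C-O arrangement with an assumed bond length of 1.16 Å, so the number is not expected to match the ase output above exactly; the function name `moment_about_axis` is also an assumption.

```python
import math


def moment_about_axis(masses, positions, axis):
    """Sum m_i * r_i**2, with r_i the distance from an axis through the COM."""
    total = sum(masses)
    com = [sum(m * p[k] for m, p in zip(masses, positions)) / total
           for k in range(3)]
    length = math.sqrt(sum(a * a for a in axis))
    n = [a / length for a in axis]  # unit vector along the axis
    moment = 0.0
    for m, p in zip(masses, positions):
        r = [p[k] - com[k] for k in range(3)]
        along = sum(r[k] * n[k] for k in range(3))
        # squared perpendicular distance = |r|^2 - (component along the axis)^2
        moment += m * (sum(rk * rk for rk in r) - along ** 2)
    return moment


# hypothetical linear O-C-O molecule on the z-axis, bond length assumed 1.16 A
masses = [12.011, 15.999, 15.999]
positions = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.16), (0.0, 0.0, -1.16)]
i_parallel = moment_about_axis(masses, positions, (0, 0, 1))
i_perp = moment_about_axis(masses, positions, (1, 0, 0))
print(i_parallel, i_perp)
```

The moment about the molecular axis vanishes because every atom sits on that axis, while the two perpendicular moments are equal by symmetry, which is the [0 Ia Ia] pattern of a linear rotor.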

If you want to know the principal axes of rotation, simply pass vectors=True to the function, and it returns the moments of inertia and the principal axes.

from ase.structure import molecule atoms = molecule('CH3Cl') moments, axes = atoms.get_moments_of_inertia(vectors=True) print('Moments = {0}'.format(moments)) print('axes = {0}'.format(axes))

Moments = [ 3.20372189 37.97009644 37.97009837] axes = [[ 0. 0. 1.] [ 0. 1. 0.] [ 1. 0. 0.]]

This shows the first moment is about the z-axis, the second moment is about the y-axis, and the third moment is about the x-axis.

3.2.5 Computing bond lengths and angles

A typical question we might ask is, "What is the structure of a molecule?" In other words, what are the bond lengths, the angles between bonds, and similar properties? The Atoms object contains an ase.Atoms.get_distance method to make this easy. To calculate the distance between two atoms, you have to specify their indices, remembering that the index starts at 0.

from ase.structure import molecule # ammonia atoms = molecule('NH3') print('atom symbol') print('===========') for i, atom in enumerate(atoms): print('{0:2d} {1:3s}' .format(i, atom.symbol)) # N-H bond length s = 'The N-H distance is {0:1.3f} angstroms' print(s.format(atoms.get_distance(0, 1)))

atom symbol =========== 0 N 1 H 2 H 3 H The N-H distance is 1.017 angstroms

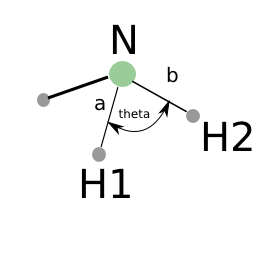

Bond angles are a little trickier. If we have vectors describing the directions between pairs of atoms, we can use the dot product to compute the angle between them: \(\vec{a} \cdot \vec{b} = |\vec{a}||\vec{b}| \cos(\theta)\), so \(\theta = \arccos\left(\frac{\vec{a} \cdot \vec{b}}{|\vec{a}||\vec{b}|}\right)\). We just have to define our two vectors \(\vec{a}\) and \(\vec{b}\), which we compute as the difference in positions of two atoms. For example, here we compute the H-N-H angle in an ammonia molecule. This is the angle between N-H\(_1\) and N-H\(_2\). In the next example, we use the numpy.arccos, numpy.dot, and numpy.linalg.norm functions to perform the calculation.

from ase.structure import molecule # ammonia atoms = molecule('NH3') print('atom symbol') print('===========') for i, atom in enumerate(atoms): print('{0:2d} {1:3s}'.format(i, atom.symbol)) a = atoms.positions[0] - atoms.positions[1] b = atoms.positions[0] - atoms.positions[2] from numpy import arccos, dot, pi from numpy.linalg import norm theta_rad = arccos(dot(a, b) / (norm(a) * norm(b))) # in radians print('theta = {0:1.1f} degrees'.format(theta_rad * 180./pi))

atom symbol =========== 0 N 1 H 2 H 3 H theta = 106.3 degrees

Figure 9: Schematic of the vectors defining the H-N-H angle.

Alternatively you could use ase.Atoms.get_angle. Note we want the angle between atoms with indices [1, 0, 2] to get the H-N-H angle.

from ase.structure import molecule from numpy import pi # ammonia atoms = molecule('NH3') print('theta = {0} degrees'.format(atoms.get_angle([1, 0, 2]) * 180. / pi))

theta = 106.334624232 degrees

3.2.5.1 Dihedral angles

There is support in ase for computing dihedral angles. Let us illustrate that for ethane. We will compute the dihedral angle between atoms 5, 1, 0, and 4. That is a H-C-C-H dihedral angle, and one can visually see (although not here) that these atoms have a dihedral angle of 60° (Figure fig:ethane-dihedral).

# calculate an ethane dihedral angle from ase.structure import molecule import numpy as np atoms = molecule('C2H6') print('atom symbol') print('===========') for i, atom in enumerate(atoms): print('{0:2d} {1:3s}'.format(i, atom.symbol)) da = atoms.get_dihedral([5, 1, 0, 4]) * 180. / np.pi print('dihedral angle = {0:1.2f} degrees'.format(da))

atom symbol =========== 0 C 1 C 2 H 3 H 4 H 5 H 6 H 7 H dihedral angle = 60.00 degrees
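For readers who want to see what a dihedral calculation involves, here is a plain-Python sketch using cross products of the three bond vectors. The function name `dihedral_degrees` and the test points are hypothetical, and the sign convention and angle range used here may differ from those of ase.Atoms.get_dihedral.

```python
import math


def sub(a, b):
    return [a[k] - b[k] for k in range(3)]


def dot(a, b):
    return sum(a[k] * b[k] for k in range(3))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dihedral_degrees(p0, p1, p2, p3):
    """Angle between the planes (p0, p1, p2) and (p1, p2, p3), in degrees."""
    b1, b2, b3 = sub(p1, p0), sub(p2, p1), sub(p3, p2)
    n1 = cross(b1, b2)  # normal to the first plane
    n2 = cross(b2, b3)  # normal to the second plane
    b2_len = math.sqrt(dot(b2, b2))
    y = dot(cross(n1, n2), b2) / b2_len
    x = dot(n1, n2)
    return math.degrees(math.atan2(y, x))


# hypothetical points: the central bond lies along z
p0, p1, p2 = (1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
cis = dihedral_degrees(p0, p1, p2, (1.0, 0.0, 1.0))
perpendicular = dihedral_degrees(p0, p1, p2, (0.0, 1.0, 1.0))
trans = dihedral_degrees(p0, p1, p2, (-1.0, 0.0, 1.0))
print(cis, perpendicular, trans)
```

Using atan2 rather than arccos keeps the sign of the angle and avoids numerical trouble near 0° and 180°.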

In this section we covered properties that require simple calculations, but not DFT calculations, to compute.


3.3 Simple properties that require single computations

There are many properties that only require a single DFT calculation to obtain the energy, forces, density of states, electron density and electrostatic potential. This section describes some of these calculations and their analysis.

3.3.1 Energy and forces

Two of the most important quantities we are interested in are the total energy and the forces on the atoms. To get these quantities, we have to define a calculator and attach it to an ase.Atoms object so that ase knows how to get the data. After defining the calculator a DFT calculation must be run.

Here is an example of getting the energy and forces from a CO molecule. The forces in this case are very high, indicating that this geometry is not close to the ground state geometry. Note that the forces are only along the $x$-axis, which is along the molecular axis. We will see how to minimize this force in Manual determination and Automatic geometry optimization with VASP.

This is your first DFT calculation in the book! See ISMEAR, SIGMA, NBANDS, and ENCUT to learn more about these VASP keywords.

from ase import Atoms, Atom from vasp import Vasp co = Atoms([Atom('C', [0, 0, 0]), Atom('O', [1.2, 0, 0])], cell=(6., 6., 6.)) calc = Vasp('molecules/simple-co', # output dir xc='pbe', # the exchange-correlation functional nbands=6, # number of bands encut=350, # planewave cutoff ismear=1, # Methfessel-Paxton smearing sigma=0.01, # very small smearing factor for a molecule atoms=co) print('energy = {0} eV'.format(co.get_potential_energy())) print(co.get_forces())

energy = -14.69111507 eV [[ 5.09138064 0. 0. ] [-5.09138064 0. 0. ]]

We can see what files were created and used in this calculation by printing the vasp attribute of the calculator.

from vasp import Vasp print(Vasp('molecules/simple-co').vasp)

INCAR

-----

INCAR created by Atomic Simulation Environment

ENCUT = 350

LCHARG = .FALSE.

NBANDS = 6

ISMEAR = 1

LWAVE = .FALSE.

SIGMA = 0.01

POSCAR

------

C O

1.0000000000000000

6.0000000000000000 0.0000000000000000 0.0000000000000000

0.0000000000000000 6.0000000000000000 0.0000000000000000

0.0000000000000000 0.0000000000000000 6.0000000000000000

1 1

Cartesian

0.0000000000000000 0.0000000000000000 0.0000000000000000

1.2000000000000000 0.0000000000000000 0.0000000000000000

KPOINTS

-------

KPOINTS created by Atomic Simulation Environment

0

Monkhorst-Pack

1 1 1

0.0 0.0 0.0

POTCAR

------

cat $VASP_PP_PATH/potpaw_PBE/C/POTCAR $VASP_PP_PATH/potpaw_PBE/O/POTCAR > POTCAR

3.3.1.1 Running a job in parallel

from ase import Atoms, Atom from vasp import Vasp from vasp.vasprc import VASPRC VASPRC['queue.ppn'] = 4 co = Atoms([Atom('C', [0, 0, 0]), Atom('O', [1.2, 0, 0])], cell=(6., 6., 6.)) calc = Vasp('molecules/simple-co-n4', # output dir xc='PBE', # the exchange-correlation functional nbands=6, # number of bands encut=350, # planewave cutoff ismear=1, # Methfessel-Paxton smearing sigma=0.01, # very small smearing factor for a molecule atoms=co) print('energy = {0} eV'.format(co.get_potential_energy())) print(co.get_forces())

energy = -14.69072754 eV [[ 5.09089107 0. 0. ] [-5.09089107 0. 0. ]]

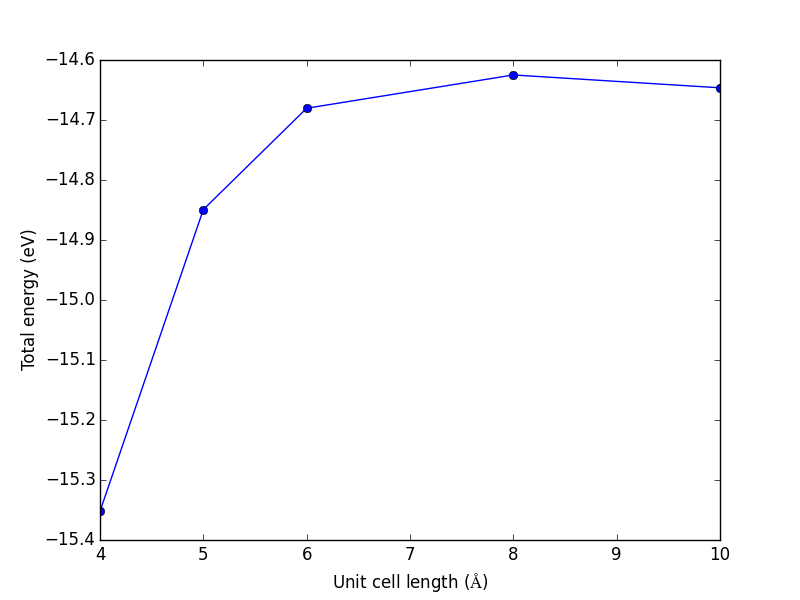

3.3.1.2 Convergence with unit cell size

There are a number of parameters that affect the energy and forces including the calculation parameters and the unit cell. We will first consider the effect of the unit cell on the total energy and forces. The reason that the unit cell affects the total energy is that it can change the distribution of electrons in the molecule.

from vasp import Vasp from ase import Atoms, Atom import numpy as np np.set_printoptions(precision=3, suppress=True) atoms = Atoms([Atom('C', [0, 0, 0]), Atom('O', [1.2, 0, 0])]) L = [4, 5, 6, 8, 10] energies = [] ready = True for a in L: atoms.set_cell([a, a, a], scale_atoms=False) atoms.center() calc = Vasp('molecules/co-L-{0}'.format(a), encut=350, xc='PBE', atoms=atoms) energies.append(atoms.get_potential_energy()) print(energies) calc.stop_if(None in energies) import matplotlib.pyplot as plt plt.plot(L, energies, 'bo-') plt.xlabel('Unit cell length ($\AA$)') plt.ylabel('Total energy (eV)') plt.savefig('images/co-e-v.png')

[-15.35943747, -14.85641864, -14.68750595, -14.63202061, -14.65342838]

Figure 10: Total energy of a CO molecule as a function of the unit cell length.

Here there are evidently attractive interactions between the CO molecules which lower the total energy for small box sizes. We have to decide what an appropriate volume for our calculation is, and the choice depends on the goal. We may wish to know the total energy of a molecule that is not interacting with any other molecules, e.g. in the ideal gas limit. In that case we need a large unit cell so the electron density from the molecule does not go outside the unit cell where it would overlap with neighboring images.

It pays to check for convergence. The cost of running the calculation goes up steeply with increasing cell size. Doubling a lattice vector length increases the volume of a cubic cell by a factor of 8, and the cost goes up because the number of planewaves that fit in the cell grows as the cell gets larger. In the timings below, going from a 5 Å to a 10 Å cell roughly quadruples the elapsed time.

from vasp import Vasp L = [4, 5, 6, 8, 10] for a in L: calc = Vasp('molecules/co-L-{0}'.format(a)) print('{0} {1} seconds'.format(a, calc.get_elapsed_time()))

4 10.748 seconds 5 11.855 seconds 6 15.613 seconds 8 28.346 seconds 10 45.259 seconds
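To see why the number of planewaves grows with the cell, we can use a rough free-electron estimate: the planewaves with kinetic energy below the cutoff fill a sphere of radius \(k_{cut} = \sqrt{2 m E_{cut}}/\hbar\) in reciprocal space, and each one occupies a reciprocal volume of \((2\pi)^3/V\), which gives about \(V k_{cut}^3 / (6\pi^2)\) planewaves. The sketch below is only an estimate under that assumption; the function name is hypothetical, and the count VASP actually uses will differ in detail.

```python
import math

HBAR2_OVER_2M = 3.80998  # hbar^2 / (2 m_e) in eV * angstrom^2 (approximate)


def estimate_planewaves(cell_length, encut):
    """Estimate the number of planewaves in a cubic cell below the cutoff."""
    volume = cell_length ** 3                 # angstrom^3
    k_cut = math.sqrt(encut / HBAR2_OVER_2M)  # 1 / angstrom
    return volume * k_cut ** 3 / (6.0 * math.pi ** 2)


for a in [4, 5, 6, 8, 10]:
    print('{0:3d} {1:8.0f}'.format(a, estimate_planewaves(a, 350)))
```

In this estimate the count is proportional to the cell volume, so doubling the cell length gives 8 times as many planewaves, and it grows as the cutoff energy to the power 3/2.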

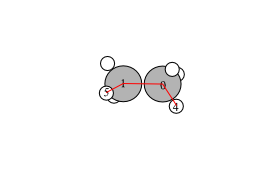

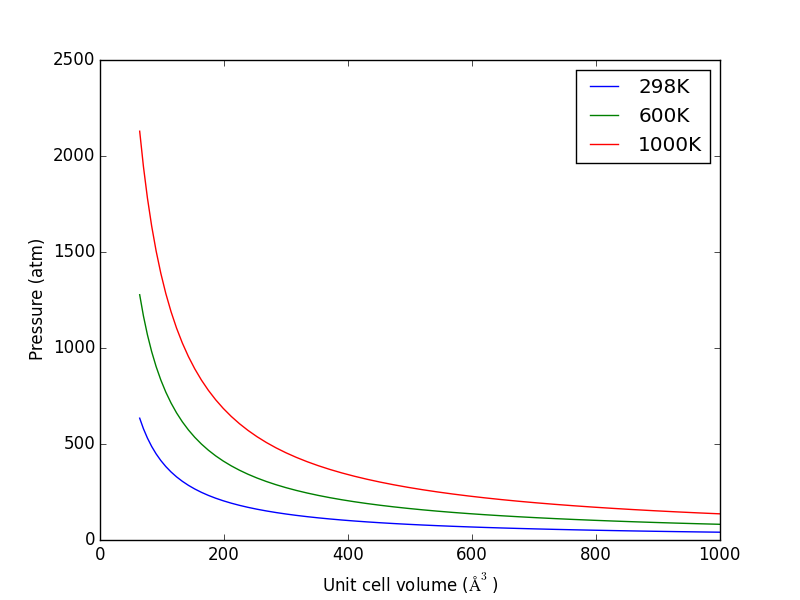

Let us consider what the pressure in the unit cell is. In the ideal gas limit we have \(PV = nRT\), which gives a pressure of zero at absolute zero. At non-zero temperatures, we have \(P = \frac{n}{V} RT\). Let us consider some examples. Because we count individual molecules rather than moles, we use \(k_B\) instead of \(R\).

from ase.units import kB, Pascal import numpy as np import matplotlib.pyplot as plt atm = 101325 * Pascal L = np.linspace(4, 10) V = L**3 n = 1 # one atom/molecule per unit cell for T in [298, 600, 1000]: P = n / V * kB * T / atm # convert to atmospheres plt.plot(V, P, label='{0}K'.format(T)) plt.xlabel('Unit cell volume ($\AA^3$)') plt.ylabel('Pressure (atm)') plt.legend(loc='best') plt.savefig('images/ideal-gas-pressure.png')

Figure 11: Ideal gas pressure dependence on temperature and unit cell volume.
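It can help to see a single number behind this plot. The sketch below evaluates \(P = n k_B T / V\) in SI units for one molecule in a cubic cell, using only the standard library; the function name is hypothetical.

```python
KB = 1.380649e-23    # Boltzmann constant in J/K
ATM = 101325.0       # pascals per atmosphere
ANGSTROM = 1.0e-10   # meters per angstrom


def ideal_gas_pressure_atm(cell_length, temperature, n=1):
    """Ideal gas pressure (atm) of n molecules in a cubic cell (length in A)."""
    volume = (cell_length * ANGSTROM) ** 3  # m^3
    return n * KB * temperature / volume / ATM


p_10 = ideal_gas_pressure_atm(10, 298)
p_4 = ideal_gas_pressure_atm(4, 298)
print('10 A cell: {0:1.1f} atm, 4 A cell: {1:1.1f} atm'.format(p_10, p_4))
```

Even the 10 Å cell corresponds to roughly 40 atm at 298 K, so these unit cells are far from ambient gas densities.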

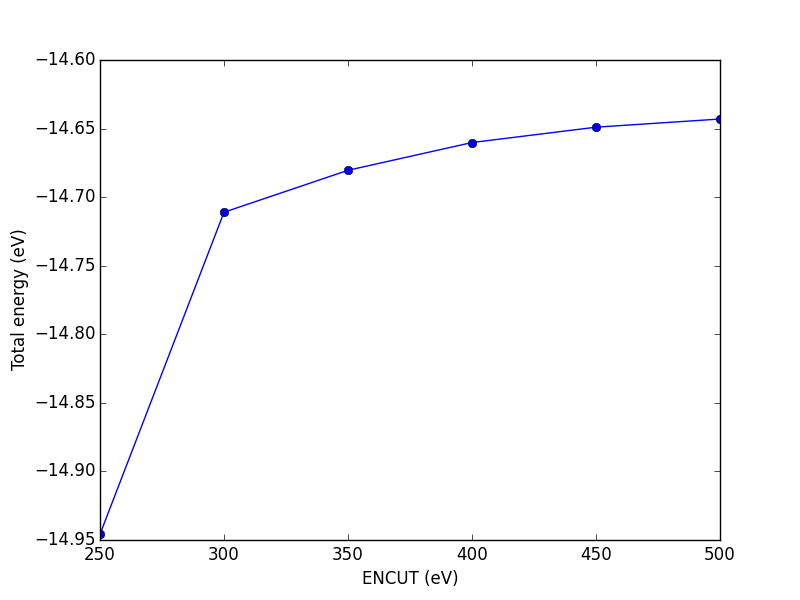

3.3.1.3 Convergence of ENCUT

The total energy and forces also depend on the computational parameters, notably ENCUT.

from ase import Atoms, Atom from vasp import Vasp import numpy as np np.set_printoptions(precision=3, suppress=True) atoms = Atoms([Atom('C', [0, 0, 0]), Atom('O', [1.2, 0, 0])], cell=(6, 6, 6)) atoms.center() ENCUTS = [250, 300, 350, 400, 450, 500] calcs = [Vasp('molecules/co-en-{0}'.format(en), encut=en, xc='PBE', atoms=atoms) for en in ENCUTS] energies = [calc.potential_energy for calc in calcs] print(energies) calcs[0].stop_if(None in energies) import matplotlib.pyplot as plt plt.plot(ENCUTS, energies, 'bo-') plt.xlabel('ENCUT (eV)') plt.ylabel('Total energy (eV)') plt.savefig('images/co-encut-v.png')

[-14.95250419, -14.71808896, -14.68750595, -14.66725733, -14.65604528, -14.65012078]

Figure 12: Dependence of the total energy of CO molecule on ENCUT.

You can see in this figure that it takes a cutoff energy of about 400 eV to achieve a convergence level around 10 meV, and that even at 500 eV the energy is still changing slightly. Keep in mind that we are generally interested in differences in total energy, and the differences tend to converge faster than a single total energy. It is also important to note that it is usually a single element that determines the rate of convergence. The reason we do not just use a very high ENCUT all the time is that it is expensive.

grep "Elapsed time (sec):" molecules/co-en-*/OUTCAR

molecules/co-en-250/OUTCAR: Elapsed time (sec): 11.634 molecules/co-en-300/OUTCAR: Elapsed time (sec): 14.740 molecules/co-en-350/OUTCAR: Elapsed time (sec): 13.577 molecules/co-en-400/OUTCAR: Elapsed time (sec): 16.310 molecules/co-en-450/OUTCAR: Elapsed time (sec): 17.704 molecules/co-en-500/OUTCAR: Elapsed time (sec): 11.658
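A plot is not the only way to judge convergence. The sketch below takes the energies printed earlier in this section and reports how far each one is from the 500 eV result, in meV. Treating the highest cutoff as the reference is an assumption made for illustration; it does not prove that the 500 eV value is itself converged.

```python
encuts = [250, 300, 350, 400, 450, 500]
# total energies (eV) copied from the ENCUT convergence output above
energies = [-14.95250419, -14.71808896, -14.68750595,
            -14.66725733, -14.65604528, -14.65012078]

reference = energies[-1]  # use the highest cutoff as the reference
deviations_mev = [(e - reference) * 1000.0 for e in energies]

for encut, dev in zip(encuts, deviations_mev):
    print('{0:4d} eV: {1:8.1f} meV'.format(encut, dev))
```

With this reference, the 400 eV result is within about 17 meV and the 450 eV result within about 6 meV of the 500 eV energy.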

3.3.1.4 Cloning

You may want to clone a calculation, so you can change some parameter without losing the previous result. The clone function does this, and changes the calculator over to the new directory.

from ase import Atoms, Atom from vasp import Vasp calc = Vasp('molecules/simple-co') print('energy = {0} eV'.format(calc.get_atoms().get_potential_energy())) # This creates the directory and makes it current working directory calc.clone('molecules/clone-1') calc.set(encut=325) # this will trigger a new calculation print('energy = {0} eV'.format(calc.get_atoms().get_potential_energy()))

energy = -14.69111507 eV energy = -14.77117554 eV

3.3.2 Visualizing electron density

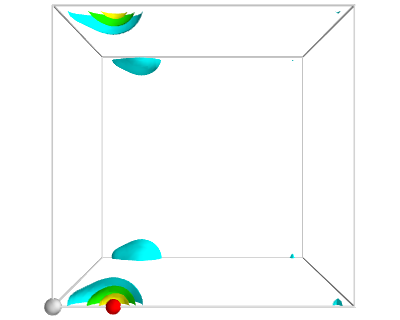

The electron density is a 3D quantity: for every \((x,y,z)\) point, there is a charge density. That means we need 4 numbers for each point: \((x,y,z)\) and \(\rho(x,y,z)\). Below we show an example (Figure fig:cd1) of plotting the charge density, and we discuss some issues that arise when visualizing volumetric data in unit cells with periodic boundary conditions. We will use the results from a previous calculation.

from vasp import Vasp from enthought.mayavi import mlab from ase.data import vdw_radii from ase.data.colors import cpk_colors calc = Vasp('molecules/simple-co') calc.clone('molecules/co-chg') calc.set(lcharg=True) calc.stop_if(calc.potential_energy is None) atoms = calc.get_atoms() x, y, z, cd = calc.get_charge_density() # make a white figure mlab.figure(1, bgcolor=(1, 1, 1)) # plot the atoms as spheres for atom in atoms: mlab.points3d(atom.x, atom.y, atom.z, #this determines the size of the atom scale_factor=vdw_radii[atom.number] / 5., resolution=20, # a tuple is required for the color color=tuple(cpk_colors[atom.number]), scale_mode='none') # draw the unit cell - there are 8 corners, and 12 connections a1, a2, a3 = atoms.get_cell() origin = [0, 0, 0] cell_matrix = [[origin, a1], [origin, a2], [origin, a3], [a1, a1 + a2], [a1, a1 + a3], [a2, a2 + a1], [a2, a2 + a3], [a3, a1 + a3], [a3, a2 + a3], [a1 + a2, a1 + a2 + a3], [a2 + a3, a1 + a2 + a3], [a1 + a3, a1 + a3 + a2]] for p1, p2 in cell_matrix: mlab.plot3d([p1[0], p2[0]], # x-positions [p1[1], p2[1]], # y-positions [p1[2], p2[2]], # z-positions tube_radius=0.02) # Now plot the charge density mlab.contour3d(x, y, z, cd) mlab.view(azimuth=-90, elevation=90, distance='auto') mlab.savefig('images/co-cd.png')

Figure 13: Charge density of a CO molecule that is located at the origin. The electron density that is outside the cell is wrapped around to the other corners. \label{fig:cd1}

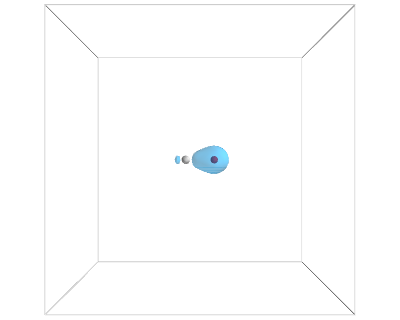

If we take care to center the CO molecule in the unit cell, we get a nicer looking result.

from vasp import Vasp from enthought.mayavi import mlab from ase.data import vdw_radii from ase.data.colors import cpk_colors from ase import Atom, Atoms atoms = Atoms([Atom('C', [2.422, 0.0, 0.0]), Atom('O', [3.578, 0.0, 0.0])], cell=(10,10,10)) atoms.center() calc = Vasp('molecules/co-centered', encut=350, xc='PBE', atoms=atoms) calc.set(lcharg=True,) calc.stop_if(calc.potential_energy is None) atoms = calc.get_atoms() x, y, z, cd = calc.get_charge_density() mlab.figure(bgcolor=(1, 1, 1)) # plot the atoms as spheres for atom in atoms: mlab.points3d(atom.x, atom.y, atom.z, scale_factor=vdw_radii[atom.number]/5, resolution=20, # a tuple is required for the color color=tuple(cpk_colors[atom.number]), scale_mode='none') # draw the unit cell - there are 8 corners, and 12 connections a1, a2, a3 = atoms.get_cell() origin = [0, 0, 0] cell_matrix = [[origin, a1], [origin, a2], [origin, a3], [a1, a1 + a2], [a1, a1 + a3], [a2, a2 + a1], [a2, a2 + a3], [a3, a1 + a3], [a3, a2 + a3], [a1 + a2, a1 + a2 + a3], [a2 + a3, a1 + a2 + a3], [a1 + a3, a1 + a3 + a2]] for p1, p2 in cell_matrix: mlab.plot3d([p1[0], p2[0]], # x-positions [p1[1], p2[1]], # y-positions [p1[2], p2[2]], # z-positions tube_radius=0.02) # Now plot the charge density mlab.contour3d(x, y, z, cd, transparent=True) # this view was empirically found by iteration mlab.view(azimuth=-90, elevation=90, distance='auto') mlab.savefig('images/co-centered-cd.png') mlab.show()

Figure 14: Charge density of a CO molecule centered in the unit cell. Now the electron density is centered in the unit cell. \label{fig:cd2}

TODO: how to make this figure

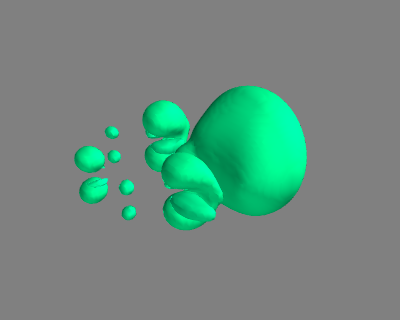

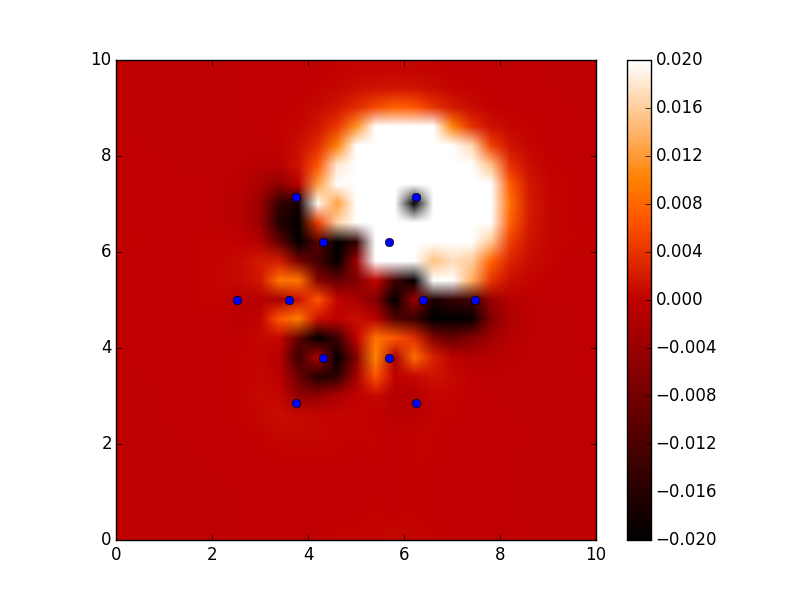

3.3.3 Visualizing electron density differences

Here, we visualize how charge moves in a benzene ring when you substitute an H atom with an electronegative Cl atom.

#!/usr/bin/env python from ase import * from ase.structure import molecule from vasp import Vasp ### Setup calculators benzene = molecule('C6H6') benzene.set_cell([10, 10, 10]) benzene.center() calc1 = Vasp('molecules/benzene', xc='PBE', nbands=18, encut=350, atoms=benzene) calc1.set(lcharg=True) chlorobenzene = molecule('C6H6') chlorobenzene.set_cell([10, 10, 10]) chlorobenzene.center() chlorobenzene[11].symbol ='Cl' calc2 = Vasp('molecules/chlorobenzene', xc='PBE', nbands=22, encut=350, atoms=chlorobenzene) calc2.set(lcharg=True) calc2.stop_if(None in (calc1.potential_energy, calc2.potential_energy)) x1, y1, z1, cd1 = calc1.get_charge_density() x2, y2, z2, cd2 = calc2.get_charge_density() cdiff = cd2 - cd1 print(cdiff.min(), cdiff.max()) ########################################## ##### set up visualization of charge difference from enthought.mayavi import mlab mlab.contour3d(x1, y1, z1, cdiff, contours=[-0.02, 0.02]) mlab.savefig('images/cdiff.png')

(-2.0821159999999987, 2.9688999999999979)

#!/usr/bin/env python from ase import * from ase.structure import molecule from vasp import Vasp import bisect import numpy as np def vinterp3d(x, y, z, u, xi, yi, zi): "Interpolate the point (xi, yi, zi) from the values at u(x, y, z)" p = np.array([xi, yi, zi]) #1D arrays of coordinates xv = x[:, 0, 0] yv = y[0, :, 0] zv = z[0, 0, :] # we subtract 1 because bisect tells us where to insert the # element to maintain an ordered list, so we want the index to the # left of that point i = bisect.bisect_right(xv, xi) - 1 j = bisect.bisect_right(yv, yi) - 1 k = bisect.bisect_right(zv, zi) - 1 if i == len(x) - 1: i = len(x) - 2 elif i < 0: i = 0 if j == len(y) - 1: j = len(y) - 2 elif j < 0: j = 0 if k == len(z) - 1: k = len(z) - 2 elif k < 0: k = 0 # points at edge of cell. We only need P1, P2, P3, and P5 P1 = np.array([x[i, j, k], y[i, j, k], z[i, j, k]]) P2 = np.array([x[i + 1, j, k], y[i + 1, j, k], z[i + 1, j, k]]) P3 = np.array([x[i, j + 1, k], y[i, j + 1, k], z[i, j + 1, k]]) P5 = np.array([x[i, j, k + 1], y[i, j, k + 1], z[i, j, k + 1]]) # values of u at edge of cell u1 = u[i, j, k] u2 = u[i+1, j, k] u3 = u[i, j+1, k] u4 = u[i+1, j+1, k] u5 = u[i, j, k+1] u6 = u[i+1, j, k+1] u7 = u[i, j+1, k+1] u8 = u[i+1, j+1, k+1] # cell basis vectors, not the unit cell, but the voxel cell containing the point cbasis = np.array([P2 - P1, P3 - P1, P5 - P1]) # now get interpolated point in terms of the cell basis s = np.dot(np.linalg.inv(cbasis.T), np.array([xi, yi, zi]) - P1) # now s = (sa, sb, sc) which are fractional coordinates in the vector space # next we do the interpolations ui1 = u1 + s[0] * (u2 - u1) ui2 = u3 + s[0] * (u4 - u3) ui3 = u5 + s[0] * (u6 - u5) ui4 = u7 + s[0] * (u8 - u7) ui5 = ui1 + s[1] * (ui2 - ui1) ui6 = ui3 + s[1] * (ui4 - ui3) ui7 = ui5 + s[2] * (ui6 - ui5) return ui7 ### Setup calculators calc = Vasp('molecules/benzene') benzene = calc.get_atoms() x1, y1, z1, cd1 = calc.get_charge_density() calc = Vasp('molecules/chlorobenzene') x2, y2, z2, cd2 = 
calc.get_charge_density() cdiff = cd2 - cd1 #we need the x-y plane at z=5 import matplotlib.pyplot as plt from scipy import mgrid X, Y = mgrid[0: 10: 25j, 0: 10: 25j] cdiff_plane = np.zeros(X.shape) ni, nj = X.shape for i in range(ni): for j in range(nj): cdiff_plane[i, j] = vinterp3d(x1, y1, z1, cdiff, X[i, j], Y[i, j], 5.0) plt.imshow(cdiff_plane.T, vmin=-0.02, # min charge diff to plot vmax=0.02, # max charge diff to plot cmap=plt.cm.gist_heat, # colormap extent=(0., 10., 0., 10.)) # axes limits # plot atom positions. It is a little tricky to see why we reverse the x and y coordinates. That is because imshow does that. x = [a.x for a in benzene] y = [a.y for a in benzene] plt.plot(x, y, 'bo') plt.colorbar() #add colorbar plt.savefig('images/cdiff-imshow.png') plt.show()
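The last step of the interpolation above is easier to follow in isolation. Here is a minimal plain-Python sketch of trilinear interpolation inside a single voxel, given the eight corner values and the fractional coordinates of the point within the voxel. The function name `trilinear` and the test function are hypothetical; the order of the three passes mirrors the code above.

```python
def trilinear(u, s):
    """Interpolate inside one voxel.

    u[i][j][k] holds the corner values (i, j, k in {0, 1}) and
    s = (sa, sb, sc) are fractional coordinates between 0 and 1.
    """
    sa, sb, sc = s
    # first pass: interpolate along the first axis on the four edges
    c00 = u[0][0][0] + sa * (u[1][0][0] - u[0][0][0])
    c10 = u[0][1][0] + sa * (u[1][1][0] - u[0][1][0])
    c01 = u[0][0][1] + sa * (u[1][0][1] - u[0][0][1])
    c11 = u[0][1][1] + sa * (u[1][1][1] - u[0][1][1])
    # second pass: interpolate along the second axis
    c0 = c00 + sb * (c10 - c00)
    c1 = c01 + sb * (c11 - c01)
    # third pass: interpolate along the third axis
    return c0 + sc * (c1 - c0)


def f(x, y, z):
    """A linear test function, which trilinear interpolation reproduces."""
    return x + 2 * y + 3 * z


corners = [[[f(i, j, k) for k in (0, 1)] for j in (0, 1)] for i in (0, 1)]
value = trilinear(corners, (0.25, 0.5, 0.75))
print(value, f(0.25, 0.5, 0.75))
```

Checking against a linear function is a useful test, because any indexing mistake in the corner values shows up as a mismatch.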

3.3.4 Dipole moments

The dipole moment is a vector describing the separation of electronic (negative) and nuclear (positive) charge. The magnitude of this vector is what is usually quoted as the dipole moment, and it has units of Coulomb-meter, or more commonly Debye. The symmetry of a molecule determines if a molecule has a dipole moment or not. Below we compute the dipole moment of CO. We must integrate the electron density to find the center of electronic charge, and compute a sum over the nuclei to find the center of positive charge.

from vasp import Vasp from vasp.VaspChargeDensity import VaspChargeDensity import numpy as np from ase.units import Debye import os calc = Vasp('molecules/co-centered') atoms = calc.get_atoms() calc.stop_if(atoms.get_potential_energy() is None) vcd = VaspChargeDensity('molecules/co-centered/CHG') cd = np.array(vcd.chg[0]) n0, n1, n2 = cd.shape s0 = 1.0 / n0 s1 = 1.0 / n1 s2 = 1.0 / n2 X, Y, Z = np.mgrid[0.0:1.0:s0, 0.0:1.0:s1, 0.0:1.0:s2] C = np.column_stack([X.ravel(), Y.ravel(), Z.ravel()]) atoms = calc.get_atoms() uc = atoms.get_cell() real = np.dot(C, uc) # now convert arrays back to unitcell shape x = np.reshape(real[:, 0], (n0, n1, n2)) y = np.reshape(real[:, 1], (n0, n1, n2)) z = np.reshape(real[:, 2], (n0, n1, n2)) nelements = n0 * n1 * n2 voxel_volume = atoms.get_volume() / nelements total_electron_charge = -cd.sum() * voxel_volume electron_density_center = np.array([(cd * x).sum(), (cd * y).sum(), (cd * z).sum()]) electron_density_center *= voxel_volume electron_density_center /= total_electron_charge electron_dipole_moment = -electron_density_center * total_electron_charge # now the ion charge center. We only need the Zval listed in the potcar from vasp.POTCAR import get_ZVAL LOP = calc.get_pseudopotentials() ppp = os.environ['VASP_PP_PATH'] zval = {} for sym, ppath, hash in LOP: fullpath = os.path.join(ppp, ppath) z = get_ZVAL(fullpath) zval[sym] = z ion_charge_center = np.array([0.0, 0.0, 0.0]) total_ion_charge = 0.0 for atom in atoms: Z = zval[atom.symbol] total_ion_charge += Z pos = atom.position ion_charge_center += Z * pos ion_charge_center /= total_ion_charge ion_dipole_moment = ion_charge_center * total_ion_charge dipole_vector = (ion_dipole_moment + electron_dipole_moment) dipole_moment = ((dipole_vector**2).sum())**0.5 / Debye print('The dipole vector is {0}'.format(dipole_vector)) print('The dipole moment is {0:1.2f} Debye'.format(dipole_moment))

    The dipole vector is [ 0.02048406 0.00026357 0.00026357]
    The dipole moment is 0.10 Debye

Note that a function using the code above exists in vasp which makes it trivial to compute the dipole moment. Here is an example of its usage.

    from vasp import Vasp
    from ase.units import Debye

    calc = Vasp('molecules/co-centered')

    dipole_moment = calc.get_dipole_moment()
    print('The dipole moment is {0:1.2f} Debye'.format(dipole_moment))

The dipole moment is 0.10 Debye
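To make the definition concrete, here is a minimal sketch for a set of point charges rather than a continuous electron density. The function name `point_charge_dipole`, the two-charge test system, and the rounded conversion factor are assumptions made for illustration; they are not part of vasp or ase.

```python
import numpy as np

# 1 Debye expressed in e*Angstrom (rounded value, assumed here so that
# the example does not depend on ase)
DEBYE = 0.20819434


def point_charge_dipole(charges, positions):
    """Return the dipole vector (e*Angstrom) of a set of point charges."""
    q = np.asarray(charges, dtype=float)
    r = np.asarray(positions, dtype=float)
    # sum over q_i * r_i
    return (q[:, None] * r).sum(axis=0)


# hypothetical system: +1 e and -1 e separated by 1 Angstrom along x
charges = [1.0, -1.0]
positions = [[1.0, 0.0, 0.0],
             [0.0, 0.0, 0.0]]

mu = point_charge_dipole(charges, positions)
mu_debye = np.linalg.norm(mu) / DEBYE

print('The dipole vector is {0} e*Ang'.format(mu))
print('The dipole moment is {0:1.2f} Debye'.format(mu_debye))
```

For a system with no net charge, the result does not depend on where the origin is placed, which is why only the relative positions of the charge centers matter.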

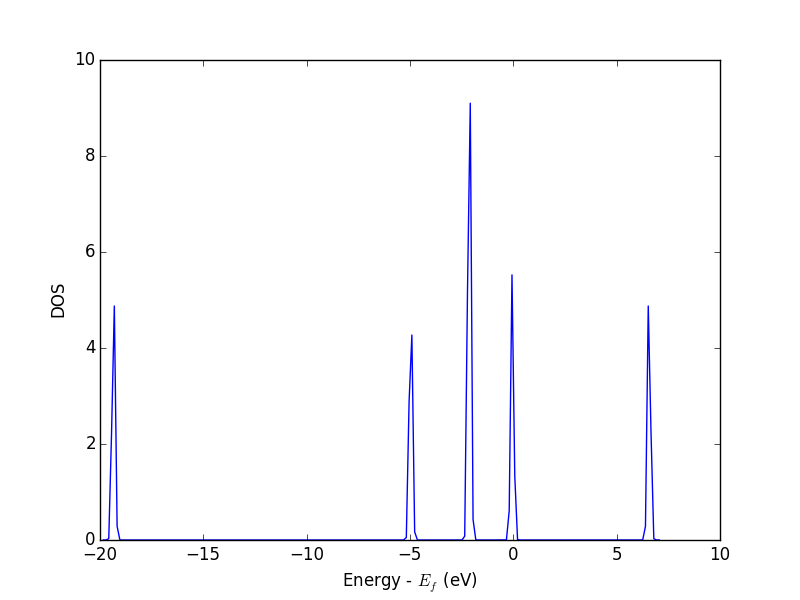

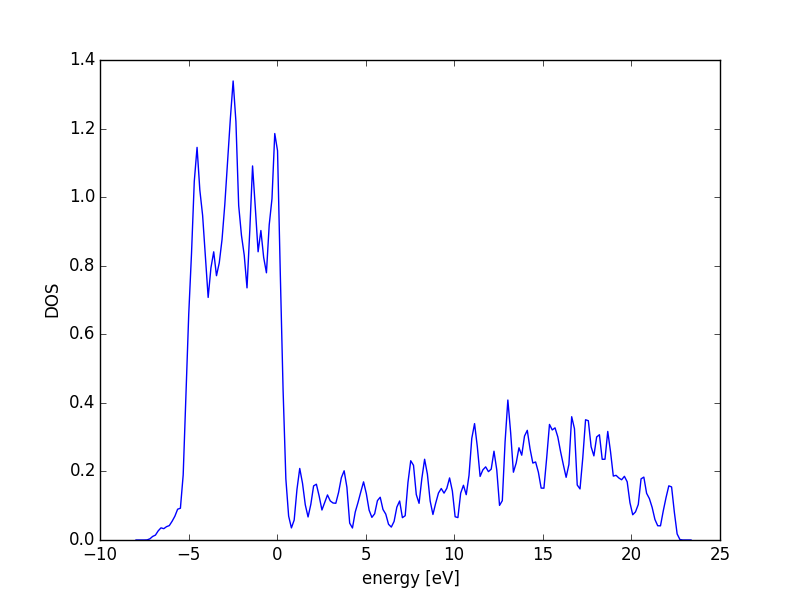

3.3.5 The density of states (DOS)

The density of states (DOS) gives you the number of electronic states (i.e., the orbitals) that have a particular energy. We can get this information from the last calculation we just ran without having to run another DFT calculation.

    from vasp import Vasp
    from ase.dft.dos import DOS
    import matplotlib.pyplot as plt

    calc = Vasp('molecules/simple-co')  # we already ran this!

    dos = DOS(calc)
    plt.plot(dos.get_energies(), dos.get_dos())
    plt.xlabel('Energy - $E_f$ (eV)')
    plt.ylabel('DOS')

    # provide the correct relative or absolute path to where you want
    # the figure saved.
    plt.savefig('images/co-dos.png')

Figure 17: Density of states for a CO molecule.
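A molecule has discrete energy levels, so a plotted DOS is usually a broadened version of them. The sketch below illustrates one common approach: placing a normalized Gaussian on each eigenvalue. The eigenvalues and the width are made up for the example, and the function `gaussian_dos` is hypothetical; it is not claimed to be how the `DOS` class used above works internally.

```python
import numpy as np


def gaussian_dos(eigenvalues, energies, width=0.1):
    """Sum one normalized Gaussian per eigenvalue on the energy grid."""
    e = np.asarray(energies, dtype=float)[:, None]
    eps = np.asarray(eigenvalues, dtype=float)[None, :]
    g = np.exp(-((e - eps) / width)**2 / 2.0)
    g /= width * np.sqrt(2.0 * np.pi)
    return g.sum(axis=1)


# hypothetical eigenvalues (eV); not taken from any calculation
eigenvalues = [-10.0, -5.0, -5.0, -1.0]
energies = np.linspace(-15.0, 5.0, 2001)

dos = gaussian_dos(eigenvalues, energies)

# integrating the DOS over energy recovers the number of states
n_states = dos.sum() * (energies[1] - energies[0])
print('integrated DOS = {0:1.3f} states'.format(n_states))
```

The doubly listed eigenvalue at -5 eV gives a peak twice as high as the others, which is the sense in which the DOS counts states at a given energy.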

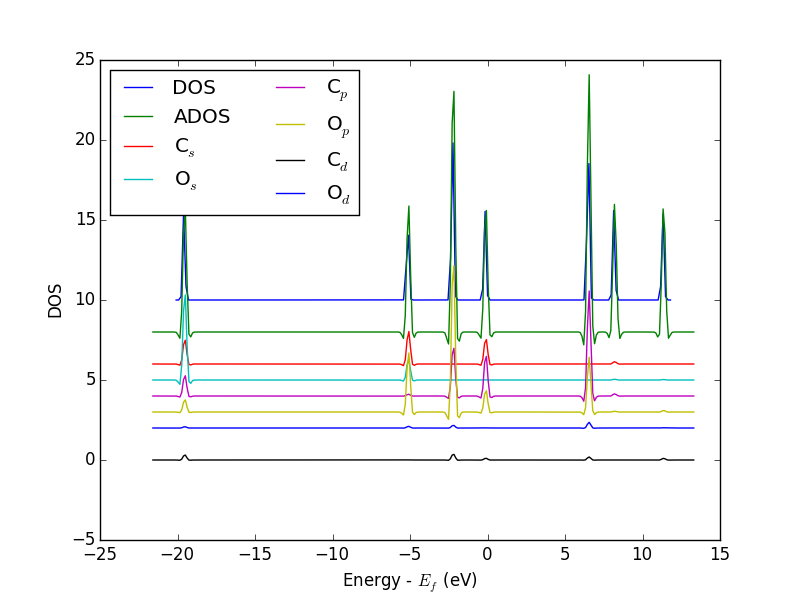

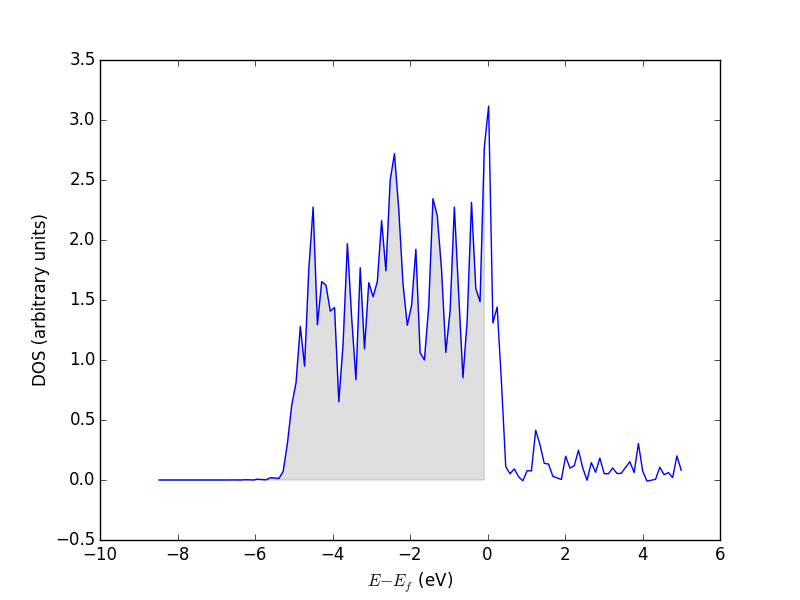

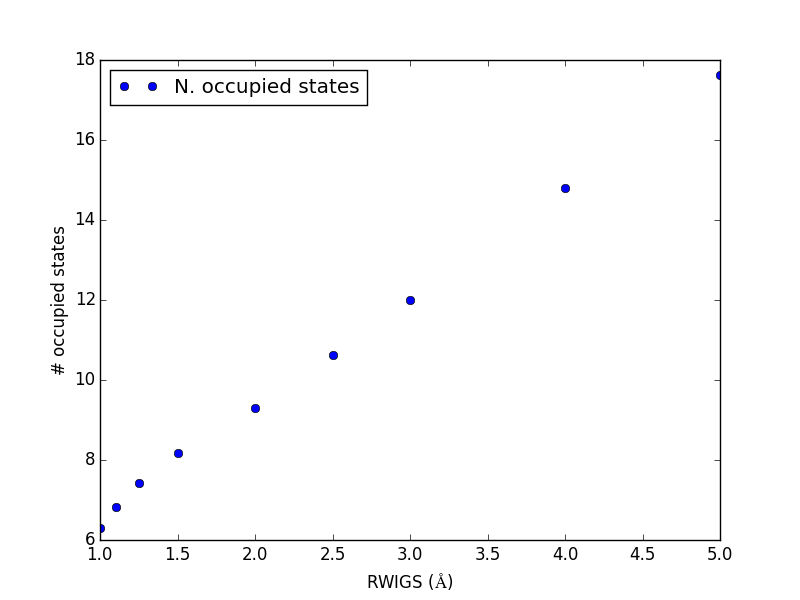

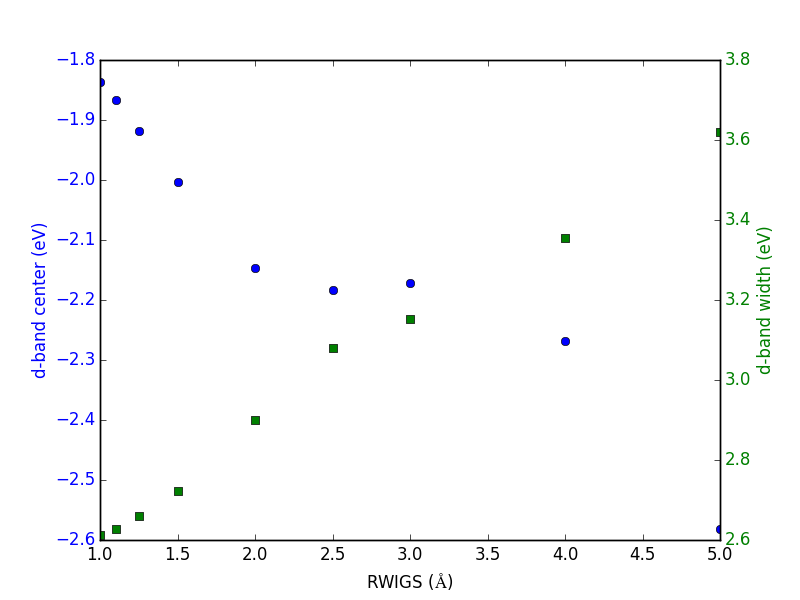

3.3.6 Atom-projected density of states on molecules

Let us consider which states in the density of states belong to which atoms in a molecule. This can only be a qualitative consideration because the orbitals on the atoms often hybridize to form molecular orbitals, e.g. in methane the \(s\) and \(p\) orbitals can form what we call \(sp^3\) orbitals. We can compute atom-projected density of states in VASP, which is done by projecting the wave function onto localized atomic orbitals. Here is an example. We will consider the CO molecule. To get atom-projected density of states, we must set RWIGS for each atom. This parameter defines the radius of the sphere around the atom which cuts off the projection. The total density of states and projected density of states information comes from the DOSCAR file.

Note that unlike the DOS, here we must run another calculation because we did not specify the atom-projected keywords above. Our strategy is to get the atoms from the previous calculation, and use them in a new calculation. You could redo the calculation in the same directory, but you risk losing the results of the first step. That can make it difficult to reproduce a result. We advocate our approach of using multiple directories for the subsequent calculations, because it leaves a clear trail of how the work was done.

The RWIGS is not uniquely determined for an element. There are various natural choices, e.g. the ionic radius of an atom, or a value that minimizes overlap of neighboring spheres, but these values can change slightly in different environments.

You can also get spin-polarized atom-projected DOS, and magnetization projected DOS. See http://cms.mpi.univie.ac.at/vasp/vasp/DOSCAR_file.html#doscar for more details.

    from vasp import Vasp
    from ase.dft.dos import DOS
    import matplotlib.pyplot as plt

    # get the geometry from another calculation
    calc = Vasp('molecules/simple-co')
    atoms = calc.get_atoms()

    calc = Vasp('molecules/co-ados',
                encut=300,
                xc='PBE',
                rwigs={'C': 1.0, 'O': 1.0},  # these are the cutoff radii for projected states
                atoms=atoms)

    calc.stop_if(calc.potential_energy is None)

    # now get results
    dos = DOS(calc)
    plt.plot(dos.get_energies(), dos.get_dos() + 10)

    energies, c_s = calc.get_ados(0, 's')
    _, c_p = calc.get_ados(0, 'p')
    _, o_s = calc.get_ados(1, 's')
    _, o_p = calc.get_ados(1, 'p')

    _, c_d = calc.get_ados(0, 'd')
    _, o_d = calc.get_ados(1, 'd')

    plt.plot(energies, c_s + 6, energies, o_s + 5)
    plt.plot(energies, c_p + 4, energies, o_p + 3)
    plt.plot(energies, c_d, energies, o_d + 2)
    plt.xlabel('Energy - $E_f$ (eV)')
    plt.ylabel('DOS')
    plt.legend(['DOS', 'C$_s$', 'O$_s$', 'C$_p$', 'O$_p$', 'C$_d$', 'O$_d$'],
               ncol=2, loc='best')
    plt.savefig('images/co-ados.png')

Figure 18: Atom-projected DOS for a CO molecule. The total density of states and the \(s\), \(p\) and \(d\) states on the C and O are shown.
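Since the choice of RWIGS affects how much the projection spheres overlap, it can help to check the geometry before running the calculation. The following is a small sketch, assuming a C-O bond length of about 1.14 Å and the RWIGS values of 1.0 Å used above; the helper `spheres_overlap` is hypothetical.

```python
def spheres_overlap(distance, r1, r2):
    """Return True if two spheres with centers `distance` apart intersect."""
    return r1 + r2 > distance


d_co = 1.14  # approximate C-O bond length in Angstrom (assumed)
rwigs = {'C': 1.0, 'O': 1.0}

overlaps = spheres_overlap(d_co, rwigs['C'], rwigs['O'])
overlap_length = rwigs['C'] + rwigs['O'] - d_co

# scale both radii by the same factor so that the spheres just touch
scale = d_co / (rwigs['C'] + rwigs['O'])
touching = {sym: r * scale for sym, r in rwigs.items()}

print('spheres overlap: {0}'.format(overlaps))
print('overlap along the bond: {0:1.2f} Ang'.format(overlap_length))
print('touching radii: {0}'.format(touching))
```

With these numbers the spheres overlap substantially, so some charge near the bond is counted in both projections. This is one reason the atom-projected DOS should be read qualitatively.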

3.3.7 Electrostatic potential

Here we compute and plot the electrostatic potential of a CF3Br molecule. This is an example of the so-called σ hole in a halogen bond. The coordinates for the CF3Br molecule were found at http://cccbdb.nist.gov/exp2.asp?casno=75638.

    from vasp import Vasp
    from ase import Atom, Atoms
    from ase.io import write
    from mayavi import mlab  # older installations used enthought.mayavi
    from ase.data import vdw_radii
    from ase.data.colors import cpk_colors

    atoms = Atoms([Atom('C',  [ 0.0000,  0.0000, -0.8088]),
                   Atom('Br', [ 0.0000,  0.0000,  1.1146]),
                   Atom('F',  [ 0.0000,  1.2455, -1.2651]),
                   Atom('F',  [ 1.0787, -0.6228, -1.2651]),
                   Atom('F',  [-1.0787, -0.6228, -1.2651])],
                  cell=(10, 10, 10))
    atoms.center()

    calc = Vasp('molecules/CF3Br',
                encut=350,
                xc='PBE',
                ibrion=1,
                nsw=50,
                lcharg=True,
                lvtot=True,
                lvhar=True,
                atoms=atoms)
    calc.set_nbands(f=2)
    calc.stop_if(calc.potential_energy is None)

    x, y, z, lp = calc.get_local_potential()
    x, y, z, cd = calc.get_charge_density()

    mlab.figure(1, bgcolor=(1, 1, 1))  # make a white figure

    # plot the atoms as spheres
    for atom in atoms:
        mlab.points3d(atom.x,
                      atom.y,
                      atom.z,
                      scale_factor=vdw_radii[atom.number]/5.,
                      resolution=20,
                      # a tuple is required for the color
                      color=tuple(cpk_colors[atom.number]),
                      scale_mode='none')

    # plot the bonds. We want a line from C-Br, C-F, etc...
    # We create a bond matrix showing which atoms are connected.
    bond_matrix = [[0, 1],
                   [0, 2],
                   [0, 3],
                   [0, 4]]

    for a1, a2 in bond_matrix:
        mlab.plot3d(atoms.positions[[a1, a2], 0],  # x-positions
                    atoms.positions[[a1, a2], 1],  # y-positions
                    atoms.positions[[a1, a2], 2],  # z-positions
                    [2, 2],
                    tube_radius=0.02,
                    colormap='Reds')

    mlab.contour3d(x, y, z, lp)
    mlab.savefig('images/halogen-ep.png')
    mlab.show()

Figure 19: Plot of the electrostatic potential of CF3Br. TODO: figure out how to do an isosurface of charge, colormapped by the local potential.

See http://www.uni-due.de/~hp0058/?file=manual03.html&dir=vmdplugins for examples of using VMD for visualization.

3.3.8 Bader analysis

Note: Thanks to @prtkm for helping improve this section (https://github.com/jkitchin/dft-book/issues/2).

Bader analysis is a charge partitioning scheme where charge is divided by surfaces of zero flux that define atomic basins of charge. A widely used way of calculating the Bader charges is with the bader program from Graeme Henkelman's group [Henkelman2006354, doi.10.1021/ct100125x]. Let us consider a water molecule, centered in a box. The strategy is first to run the calculation, then run the bader program on the results.

We have to set laechg to true so that the all-electron core charges will be written out to files. Here we set up and run the calculation to get the densities first.

    from vasp import Vasp
    from ase.build import molecule  # formerly ase.structure

    atoms = molecule('H2O')
    atoms.center(vacuum=6)

    calc = Vasp('molecules/h2o-bader',
                xc='PBE',
                encut=350,
                lcharg=True,
                laechg=True,
                atoms=atoms)
    print(calc.potential_energy)

-14.22250648

Now that the calculation is done, get the bader code and scripts from http://theory.cm.utexas.edu/henkelman/code/bader/.

We use this code to see the changes in charges on the atoms.

    from vasp import Vasp

    calc = Vasp('molecules/h2o-bader')
    calc.bader(ref=True, overwrite=True)
    atoms = calc.get_atoms()
    for atom in atoms:
        print('|{0} | {1} |'.format(atom.symbol, atom.charge))

| Atom | Charge (e) |
|---|---|
| O | -1.2326 |
| H | 0.6161 |
| H | 0.6165 |

The results above are comparable to those from gpaw at https://wiki.fysik.dtu.dk/gpaw/tutorials/bader/bader.html.

You can see some charge has been "transferred" from H to O.
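As a quick sanity check on such results, the net charges on a neutral molecule should sum to approximately zero. The sketch below uses the charges printed above and also converts them to electron populations, under the assumption that the net charge is the number of valence electrons minus the electrons assigned to the atomic basin. The valence electron counts (6 for O, 1 for H) are typical values assumed here, not read from the POTCAR files.

```python
# Bader charges reported above for H2O, in units of e
charges = {'O': -1.2326, 'H1': 0.6161, 'H2': 0.6165}

# valence electrons per atom (assumed typical values for these elements)
zval = {'O': 6.0, 'H1': 1.0, 'H2': 1.0}

# electrons assigned to each atomic basin = valence electrons - net charge
populations = {key: zval[key] - q for key, q in charges.items()}

net_charge = sum(charges.values())
total_electrons = sum(populations.values())

for key in charges:
    print('{0:2s} charge = {1:8.4f}  population = {2:7.4f}'.format(
        key, charges[key], populations[key]))
print('net charge = {0:1.4f} e'.format(net_charge))
print('total valence electrons = {0:1.4f}'.format(total_electrons))
```

If the net charge deviates noticeably from zero, that often indicates the charge density grid is too coarse for the partitioning.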

3.4 Geometry optimization

3.4.1 Manual determination of a bond length

The equilibrium bond length of a CO molecule is approximately the bond length that minimizes the total energy. We can find that by computing the total energy as a function of bond length, and noting where the minimum is. Here is an example in VASP. There are a few features to point out here. We want to compute 5 bond lengths, and each calculation is independent of all the others. vasp is set up to automatically handle jobs for you by submitting them to the queue. It raises a variety of exceptions to let you know what has happened, and you must handle these to control the workflow. We will illustrate this by the following examples.

    from vasp import Vasp
    from ase import Atom, Atoms

    bond_lengths = [1.05, 1.1, 1.15, 1.2, 1.25]
    energies = []

    for d in bond_lengths:  # possible bond lengths

        co = Atoms([Atom('C', [0, 0, 0]),
                    Atom('O', [d, 0, 0])],
                   cell=(6, 6, 6))

        calc = Vasp('molecules/co-{0}'.format(d),  # output dir
                    xc='PBE',
                    nbands=6,
                    encut=350,
                    ismear=1,
                    sigma=0.01,
                    atoms=co)

        energies.append(co.get_potential_energy())
        print('d = {0:1.2f} ang'.format(d))
        print('energy = {0:1.3f} eV'.format(energies[-1] or 0))
        print('forces = (eV/ang)\n {0}'.format(co.get_forces()))
        print('')  # blank line

    if None in energies:
        calc.abort()
    else:
        import matplotlib.pyplot as plt
        plt.plot(bond_lengths, energies, 'bo-')
        plt.xlabel(r'Bond length ($\AA$)')
        plt.ylabel('Total energy (eV)')
        plt.savefig('images/co-bondlengths.png')

    d = 1.05 ang
    energy = -14.216 eV
    forces = (eV/ang)
     [[-14.93017486 0. 0. ]
     [ 14.93017486 0. 0. ]]

    d = 1.10 ang
    energy = -14.722 eV
    forces = (eV/ang)
     [[-5.81988086 0. 0. ]
     [ 5.81988086 0. 0. ]]

    d = 1.15 ang
    energy = -14.841 eV
    forces = (eV/ang)
     [[ 0.63231023 0. 0. ]
     [-0.63231023 0. 0. ]]

    d = 1.20 ang
    energy = -14.691 eV
    forces = (eV/ang)
     [[ 5.09138064 0. 0. ]
     [-5.09138064 0. 0. ]]

    d = 1.25 ang
    energy = -14.355 eV
    forces = (eV/ang)
     [[ 8.14027842 0. 0. ]
     [-8.14027842 0. 0. ]]

Before continuing, it is worth looking at some other approaches to set up and run these calculations. Here we consider a functional approach that uses list comprehensions pretty extensively.

    from vasp import Vasp
    from ase import Atom, Atoms

    bond_lengths = [1.05, 1.1, 1.15, 1.2, 1.25]

    ATOMS = [Atoms([Atom('C', [0, 0, 0]),
                    Atom('O', [d, 0, 0])],
                   cell=(6, 6, 6))
             for d in bond_lengths]

    calcs = [Vasp('molecules/co-{0}'.format(d),  # output dir
                  xc='PBE',
                  nbands=6,
                  encut=350,
                  ismear=1,
                  sigma=0.01,
                  atoms=atoms)
             for d, atoms in zip(bond_lengths, ATOMS)]

    energies = [atoms.get_potential_energy() for atoms in ATOMS]

    print(energies)

[-14.21584765, -14.72174343, -14.84115208, -14.69111507, -14.35508371]

We can retrieve data similarly.

    from vasp import Vasp

    bond_lengths = [1.05, 1.1, 1.15, 1.2, 1.25]
    calcs = [Vasp('molecules/co-{0}'.format(d)) for d in bond_lengths]

    energies = [calc.get_atoms().get_potential_energy() for calc in calcs]

    print(energies)

[-14.21584765, -14.72174343, -14.84115208, -14.69111507, -14.35508371]

We can also store the results in an ase database.

    from vasp import Vasp
    from ase.db import connect

    bond_lengths = [1.05, 1.1, 1.15, 1.2, 1.25]
    calcs = [Vasp('molecules/co-{0}'.format(d)) for d in bond_lengths]

    con = connect('co-database.db', append=False)
    for atoms in [calc.get_atoms() for calc in calcs]:
        con.write(atoms)

Here we just show that there are entries in our database. Because the connection is opened with append=False, the database is started fresh on each run; without that argument, each run of the code above would add new entries.

ase-db co-database.db

    id|age|user    |formula|calculator| energy|  fmax|pbc| volume|charge|  mass| smax|magmom
     1|12s|jkitchin|CO     |vasp      |-14.216|14.930|TTT|216.000| 0.000|28.010|0.060| 0.000
     2|10s|jkitchin|CO     |vasp      |-14.722| 5.820|TTT|216.000| 0.000|28.010|0.017| 0.000
     3| 9s|jkitchin|CO     |vasp      |-14.841| 0.632|TTT|216.000| 0.000|28.010|0.017| 0.000
     4| 9s|jkitchin|CO     |vasp      |-14.691| 5.091|TTT|216.000| 0.000|28.010|0.041| 0.000
     5| 7s|jkitchin|CO     |vasp      |-14.355| 8.140|TTT|216.000| 0.000|28.010|0.060| 0.000
    Rows: 5

This database is now readable in Python too. Here we read in all the results. Later we will learn how to select specific entries.

    from ase.io import read

    ATOMS = read('co-database.db', ':')

    print([a[0].x - a[1].x for a in ATOMS])
    print([atoms.get_potential_energy() for atoms in ATOMS])

    [-1.0499999999999998, -1.09999998, -1.15000002, -1.2000000000000002, -1.2499999800000001]
    [-14.21584765, -14.72174343, -14.84115208, -14.69111507, -14.35508371]

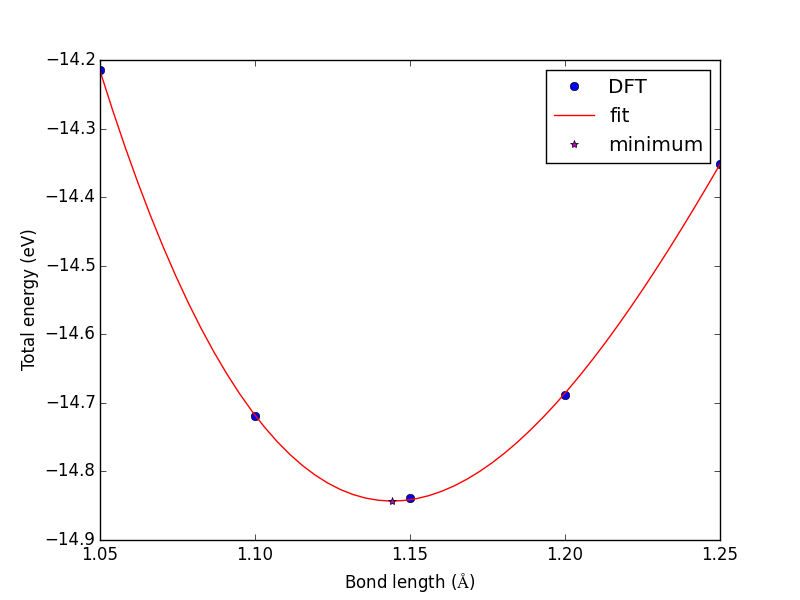

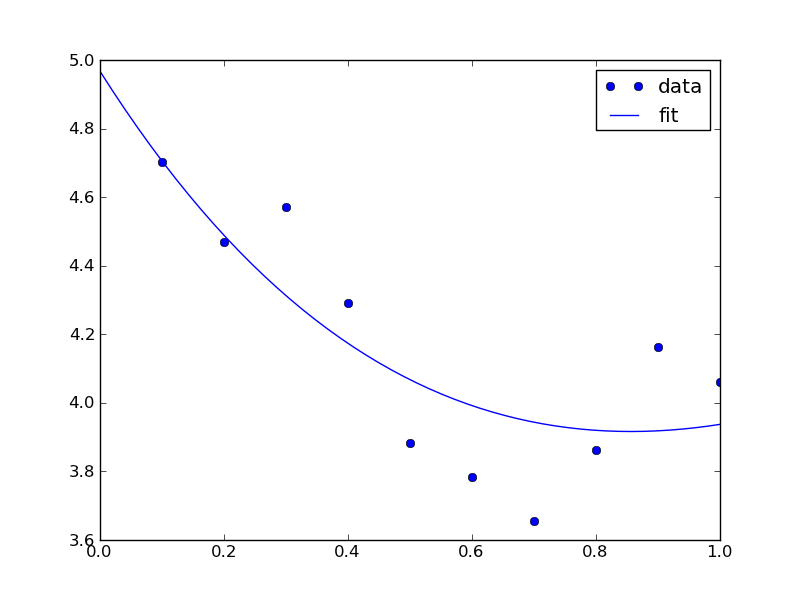

Now, back to the goal of finding the minimum. To find the minimum we could run more calculations, but a simpler and faster way is to fit a polynomial to the data and find its minimum analytically. The results are shown in Figure 21.

    from vasp import Vasp
    import numpy as np
    import matplotlib.pyplot as plt

    bond_lengths = [1.05, 1.1, 1.15, 1.2, 1.25]
    energies = []

    for d in bond_lengths:  # possible bond lengths
        calc = Vasp('molecules/co-{0}'.format(d))
        atoms = calc.get_atoms()
        energies.append(atoms.get_potential_energy())

    # Now we fit an equation - cubic polynomial
    pp = np.polyfit(bond_lengths, energies, 3)
    dp = np.polyder(pp)  # first derivative - quadratic

    # we expect two roots from the quadratic eqn. These are where the
    # first derivative is equal to zero.
    roots = np.roots(dp)

    # The minimum is where the second derivative is positive.
    dpp = np.polyder(dp)  # second derivative - line
    secd = np.polyval(dpp, roots)

    min_d = roots[secd > 0]          # bond length at the minimum
    min_E = np.polyval(pp, min_d)    # energy at the minimum

    print('The minimum energy is {0[0]} eV at d = {1[0]} Ang'.format(min_E, min_d))

    # plot the fit
    x = np.linspace(1.05, 1.25)
    fit = np.polyval(pp, x)

    plt.plot(bond_lengths, energies, 'bo ')
    plt.plot(x, fit, 'r-')
    plt.plot(min_d, min_E, 'm* ')
    plt.legend(['DFT', 'fit', 'minimum'], numpoints=1)
    plt.xlabel(r'Bond length ($\AA$)')
    plt.ylabel('Total energy (eV)')
    plt.savefig('images/co-bondlengths.png')

    The minimum energy is -14.8458440947 eV at d = 1.14437582331 Ang

Figure 21: Energy vs CO bond length.
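The forces give an independent estimate of the same bond length, because the force on each atom should vanish at the energy minimum. The sketch below linearly interpolates the x-component of the force on the O atom, using the values printed earlier in this section, to find where it crosses zero. Linear interpolation is an assumption made for simplicity; a polynomial fit would work as well.

```python
import numpy as np

bond_lengths = np.array([1.05, 1.10, 1.15, 1.20, 1.25])

# x-component of the force on the O atom (eV/Ang) at each bond length,
# copied from the forces printed earlier in this section
f_O = np.array([14.93017486, 5.81988086, -0.63231023,
                -5.09138064, -8.14027842])

# np.interp requires increasing x-values. The force decreases with
# bond length, so we reverse both arrays before interpolating.
d0 = np.interp(0.0, f_O[::-1], bond_lengths[::-1])

print('The force is zero at d = {0:1.3f} Ang'.format(d0))
```

The estimate should fall between 1.10 and 1.15 Å, where the force changes sign, and close to the minimum found from the polynomial fit to the energies.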

3.4.2 Automatic geometry optimization with VASP

It is generally the case that the equilibrium geometry of a system is the one that minimizes the total energy, at which point the forces on the atoms vanish. Since each atom has three degrees of freedom, you can quickly get a high dimensional optimization problem. Luckily, VASP has built-in geometry optimization using the IBRION and NSW tags. Here we compute the bond length for a CO molecule, letting VASP do the geometry optimization for us.

Here are the most common choices for IBRION.

| IBRION value | algorithm |
|---|---|
| 1 | quasi-Newton (use if initial guess is good) |
| 2 | conjugate gradient |

VASP applies a criterion for stopping a geometry optimization. When the change in energy between two steps is less than 0.001 eV (or 10*EDIFF), the relaxation is stopped. This criterion is controlled by the EDIFFG tag. If you prefer to stop based on forces, set EDIFFG to a negative number, e.g. EDIFFG=-0.05. The units of force are eV/Å. For most work, a force tolerance of 0.05 eV/Å is usually sufficient.
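The two stopping rules can be written down in a few lines. This is only a sketch of the logic described above, not VASP's implementation; the function name `relaxation_converged` and the energies in the second call are hypothetical, while the forces are those printed for d = 1.15 Å in the previous section.

```python
import numpy as np


def relaxation_converged(ediffg, e_prev, e_curr, forces):
    """Sketch of the two stopping rules described above.

    ediffg > 0: stop when the energy change is below ediffg (eV).
    ediffg < 0: stop when every atomic force is below |ediffg| (eV/Ang).
    """
    if ediffg > 0:
        return bool(abs(e_curr - e_prev) < ediffg)
    # largest force magnitude on any atom
    fmax = np.linalg.norm(np.asarray(forces, dtype=float), axis=1).max()
    return bool(fmax < abs(ediffg))


# forces on C and O at d = 1.15 Ang (eV/Ang)
forces = [[0.63231023, 0.0, 0.0],
          [-0.63231023, 0.0, 0.0]]

# force criterion: 0.63 eV/Ang is well above the 0.05 eV/Ang tolerance
force_ok = relaxation_converged(-0.05, -14.841, -14.841, forces)

# energy criterion with two hypothetical successive energies (eV)
energy_ok = relaxation_converged(0.001, -14.8412, -14.8415, forces)

print('converged by forces: {0}'.format(force_ok))
print('converged by energy: {0}'.format(energy_ok))
```

The example shows why the choice matters: a small energy change between two steps can satisfy the energy criterion even while the forces are still far from the tolerance.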